Scaling

No edit summary |

|||

| (20 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

'''This guide provides a comprehensive overview of performance tuning and scaling for VoIPmonitor. It covers the three primary system bottlenecks and offers practical, expert-level advice for optimizing your deployment for high traffic loads.''' | |||

== Understanding Performance Bottlenecks == | |||

A VoIPmonitor deployment's maximum capacity is determined by three potential bottlenecks. Identifying and addressing the correct one is key to achieving high performance. | |||

# '''Packet Capturing (CPU & Network Stack):''' The ability of a single CPU core to read packets from the network interface. This is often the first limit you will encounter. | |||

# '''Disk I/O (Storage):''' The speed at which the sensor can write PCAP files to disk. This is critical when call recording is enabled. | |||

# '''Database Performance (MySQL/MariaDB):''' The rate at which the database can ingest Call Detail Records (CDRs) and serve data to the GUI. | |||

VoIPmonitor | On a modern, well-tuned server (e.g., 24-core Xeon, 10Gbit NIC), a single VoIPmonitor instance can handle up to '''10,000 concurrent calls''' with full RTP analysis and recording, or over '''60,000 concurrent calls''' with SIP-only analysis. | ||

== 1. Optimizing Packet Capturing (CPU & Network) == | |||

The most performance-critical task is the initial packet capture, which is handled by a single, highly optimized thread (t0). If this thread's CPU usage (`t0CPU` in logs) approaches 100%, you are hitting the capture limit. Here are the primary methods to optimize it, from easiest to most advanced. | |||

=== A. Use a Modern Linux Kernel & VoIPmonitor Build === | |||

Modern Linux kernels (3.2+) and VoIPmonitor builds include '''TPACKET_V3''' support, a high-speed packet capture mechanism. This is the single most important factor for high performance. | |||

* '''Recommendation:''' Always use a recent Linux distribution (like AlmaLinux, Rocky Linux, or Debian) and the latest VoIPmonitor static binary. With this combination, a standard Intel 10Gbit NIC can often handle up to 2 Gbit/s of VoIP traffic without special drivers. | |||

= | === B. Network Stack & Driver Tuning === | ||

For high-traffic environments (>500 Mbit/s), fine-tuning the network driver and kernel parameters is essential. | |||

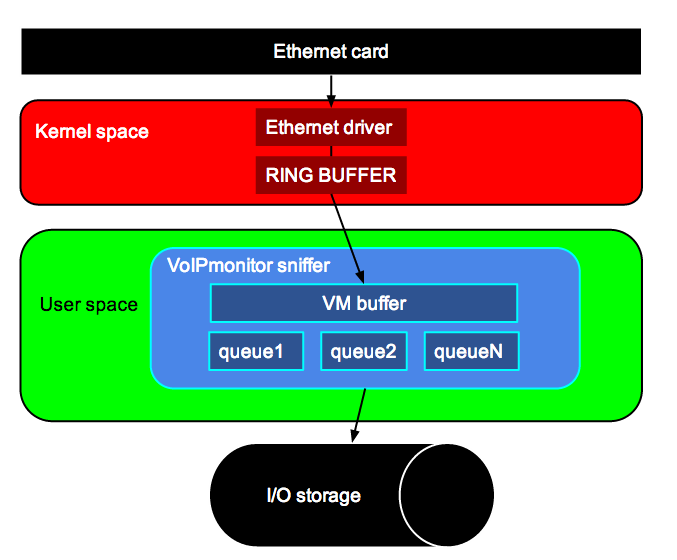

== | ==== NIC Ring Buffer ==== | ||

The ring buffer is a queue between the network card driver and the VoIPmonitor application. A larger buffer prevents packet loss during short CPU usage spikes. | |||

# '''Check maximum size:''' | |||

#<pre>ethtool -g eth0</pre> | |||

# '''Set to maximum (e.g., 16384):''' | |||

#<pre>ethtool -G eth0 rx 16384</pre> | |||

==== Interrupt Coalescing ==== | |||

This setting batches multiple hardware interrupts into one, reducing CPU overhead. | |||

#<pre>ethtool -C eth0 rx-usecs 1022</pre> | |||

==== Applying Settings Persistently ==== | |||

To make these settings permanent, add them to your network configuration. For Debian/Ubuntu using `/etc/network/interfaces`: | |||

<pre> | |||

auto eth0 | |||

iface eth0 inet manual | |||

up ip link set $IFACE up | |||

up ip link set $IFACE promisc on | |||

up ethtool -G $IFACE rx 16384 | |||

up ethtool -C $IFACE rx-usecs 1022 | |||

</pre> | |||

''Note: Modern systems using NetworkManager or systemd-networkd require different configuration methods.'' | |||

=== C. Advanced Offloading and Kernel-Bypass Solutions === | |||

If kernel and driver tuning are insufficient, you can offload the capture process entirely by bypassing the kernel's network stack. | |||

* '''DPDK (Data Plane Development Kit):''' DPDK is a set of libraries and drivers for fast packet processing. VoIPmonitor can leverage DPDK to read packets directly from the network card, completely bypassing the kernel and significantly reducing CPU overhead. This is a powerful, open-source solution for achieving multi-gigabit capture rates on commodity hardware. For detailed installation and configuration instructions, see the [[DPDK|official DPDK guide]]. | |||

* '''PF_RING ZC/DNA:''' A commercial software driver from ntop.org that also dramatically reduces CPU load by bypassing the kernel. In tests, it can reduce CPU usage from 90% to as low as 20% for the same traffic load. | |||

* '''Napatech SmartNICs:''' Specialized hardware acceleration cards that deliver packets to VoIPmonitor with near-zero CPU overhead (<3% CPU for 10 Gbit/s traffic). This is the ultimate solution for extreme performance requirements. | |||

== 2. Optimizing Disk I/O == | |||

VoIPmonitor's modern storage engine is highly optimized to minimize random disk access, which is the primary cause of I/O bottlenecks. | |||

=== VoIPmonitor Storage Strategy === | |||

Instead of writing a separate PCAP file for each call (which causes massive I/O load), VoIPmonitor groups all calls starting within the same minute into a single compressed `.tar` archive. This changes the I/O pattern from thousands of small, random writes to a few large, sequential writes, reducing IOPS (I/O Operations Per Second) by a factor of 10 or more. A standard 7200 RPM SATA drive can typically handle up to 2000 concurrent calls with full recording. | |||

=== Filesystem Tuning (ext4) === | |||

For the spool directory (`/var/spool/voipmonitor`), using an optimized ext4 filesystem can improve performance. | |||

*'''Example setup:''' | |||

<pre> | |||

# Format partition without a journal (use with caution, requires battery-backed RAID controller) | |||

mke2fs -t ext4 -O ^has_journal /dev/sda2 | |||

# Add to /etc/fstab for optimal performance | |||

/dev/sda2 /var/spool/voipmonitor ext4 errors=remount-ro,noatime,data=writeback,barrier=0 0 0 | |||

</pre> | |||

=== RAID Controller Cache Policy === | |||

A misconfigured RAID controller is a common bottleneck. For database and spool workloads, the cache policy should always be set to '''WriteBack''', not WriteThrough. This requires a healthy Battery Backup Unit (BBU). If the BBU is dead or missing, you may need to force this setting. | |||

* ''The specific commands vary by vendor (`megacli`, `ssacli`, `perccli`). Refer to the original, more detailed version of this article or vendor documentation for specific commands for LSI, HP, and Dell controllers.'' | |||

== 3. Optimizing Database Performance (MySQL/MariaDB) == | |||

A well-tuned database is critical for both data ingestion from the sensor and responsiveness of the GUI. | |||

=== Key Configuration Parameters === | |||

These settings should be placed in your `my.cnf` or a file in `/etc/mysql/mariadb.conf.d/`. | |||

* '''`innodb_buffer_pool_size`''': '''This is the most important setting.''' It defines the amount of memory InnoDB uses to cache both data and indexes. A good starting point is 50-70% of the server's total available RAM. For a dedicated database server, 8GB is a good minimum, with 32GB or more being optimal for large databases. | |||

( | * '''`innodb_flush_log_at_trx_commit = 2`''': The default value of `1` forces a write to disk for every single transaction, which is very slow without a high-end, battery-backed RAID controller. Setting it to `2` relaxes this, flushing logs to the OS cache and writing to disk once per second. This dramatically improves write performance with a minimal risk of data loss (max 1-2 seconds) in case of a full OS crash. | ||

* '''`innodb_file_per_table = 1`''': This instructs InnoDB to store each table and its indexes in its own `.ibd` file, rather than in one giant, monolithic `ibdata1` file. This is essential for performance, management, and features like table compression and partitioning. | |||

* '''LZ4 Compression:''' For modern MySQL/MariaDB versions, using LZ4 for page compression offers a great balance of reduced storage size and minimal CPU overhead. | |||

<pre> | |||

# In my.cnf [mysqld] section | |||

innodb_compression_algorithm=lz4 | |||

# For MariaDB, you may also need to set default table formats: | |||

# mysqlcompress_type = PAGE_COMPRESSED=1 in voipmonitor.conf | |||

</pre> | |||

=== Database Partitioning === | |||

Partitioning is a feature where VoIPmonitor automatically splits large tables (like `cdr`) into smaller, more manageable pieces, typically one per day. This is enabled by default and is '''highly recommended'''. | |||

* '''Benefits:''' | |||

* Massively improves query performance in the GUI, as it only needs to scan partitions relevant to the selected time range. | |||

* Allows for instant deletion of old data by dropping a partition, which is thousands of times faster than running a `DELETE` query on millions of rows. | |||

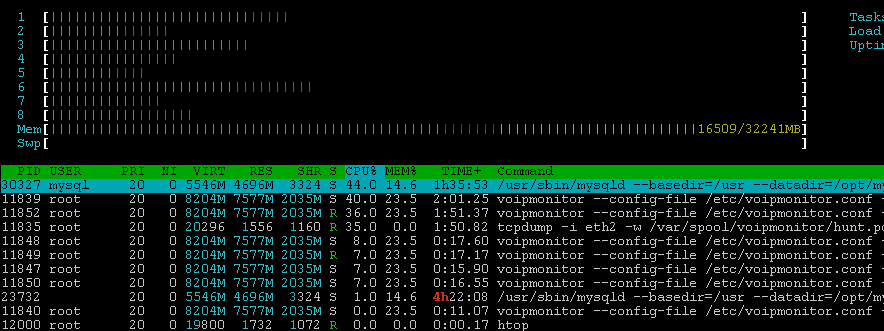

== 4. Monitoring Live Performance == | |||

VoIPmonitor logs detailed performance metrics every 10 seconds to syslog. You can watch them live: | |||

<pre> | |||

tail -f /var/log/syslog # Debian/Ubuntu | |||

tail -f /var/log/messages # CentOS/RHEL | |||

</pre> | |||

A sample log line: | |||

<code>voipmonitor[15567]: calls[315][355] PS[C:4 S:29/29 R:6354 A:6484] SQLq[0] heap[0|0|0] comp[54] [12.6Mb/s] t0CPU[5.2%] ... RSS/VSZ[323|752]MB</code> | |||

* '''`calls[X][Y]`''': X = active calls, Y = total calls in memory. | |||

* '''`SQLq[C]`''': Number of SQL queries waiting to be sent to the database. If this number is consistently growing, your database cannot keep up. | |||

* '''`heap[A|B|C]`''': Memory usage percentages for internal buffers. If A (main heap) reaches 100%, packets will be dropped. | |||

* '''`t0CPU[X%]`''': '''The most important CPU metric.''' This is the usage of the main packet capture thread. If it consistently exceeds 90-95%, you are at your server's capture limit. | |||

*calls | |||

* | |||

heap[A|B|C] | |||

* | |||

[[File:kernelstandarddiagram.png]] | [[File:kernelstandarddiagram.png]] | ||

[[File:ntop.png]] | [[File:ntop.png]] | ||

== | == AI Summary for RAG == | ||

'''Summary:''' This article is an expert guide to scaling and performance tuning VoIPmonitor for high-traffic environments. It identifies the three main system bottlenecks: Packet Capturing (CPU-bound), Disk I/O (for PCAP storage), and Database Performance (MySQL/MariaDB). For packet capture, it details tuning network card drivers with `ethtool`, the benefits of modern kernels with `TPACKET_V3`, and advanced kernel-bypass solutions like DPDK, PF_RING, and Napatech SmartNICs. For I/O, it explains VoIPmonitor's efficient TAR-based storage and provides tuning tips for ext4 filesystems and RAID controller cache policies. The largest section focuses on MySQL/MariaDB tuning, emphasizing the importance of `innodb_buffer_pool_size`, `innodb_flush_log_at_trx_commit`, `innodb_file_per_table`, and native LZ4 compression. It also explains the critical role of database partitioning for query performance and data retention. Finally, it details how to interpret live performance statistics from the syslog to diagnose bottlenecks. | |||

'''Keywords:''' performance tuning, scaling, high throughput, bottleneck, CPU bound, t0CPU, packet capture, TPACKET_V3, DPDK, ethtool, ring buffer, interrupt coalescing, PF_RING, Napatech, I/O bottleneck, IOPS, filesystem tuning, ext4, RAID cache, WriteBack, megacli, ssacli, MySQL performance, MariaDB tuning, innodb_buffer_pool_size, innodb_flush_log_at_trx_commit, innodb_file_per_table, LZ4 compression, database partitioning, syslog, monitoring, high calls per second, CPS | |||

'''Key Questions:''' | |||

* How do I scale VoIPmonitor for thousands of concurrent calls? | |||

* What are the main performance bottlenecks in VoIPmonitor? | |||

* How can I fix high t0CPU usage? | |||

* What is DPDK and when should I use it? | |||

* What are the best `my.cnf` settings for a high-performance VoIPmonitor database? | |||

* How does database partitioning work and why is it important? | |||

* My sniffer is dropping packets, how do I fix it? | |||

* How do I interpret the performance metrics in the syslog? | |||

* Should I use a dedicated database server for VoIPmonitor? | |||

Latest revision as of 09:53, 30 June 2025

This guide provides a comprehensive overview of performance tuning and scaling for VoIPmonitor. It covers the three primary system bottlenecks and offers practical, expert-level advice for optimizing your deployment for high traffic loads.

Understanding Performance Bottlenecks

A VoIPmonitor deployment's maximum capacity is determined by three potential bottlenecks. Identifying and addressing the correct one is key to achieving high performance.

- Packet Capturing (CPU & Network Stack): The ability of a single CPU core to read packets from the network interface. This is often the first limit you will encounter.

- Disk I/O (Storage): The speed at which the sensor can write PCAP files to disk. This is critical when call recording is enabled.

- Database Performance (MySQL/MariaDB): The rate at which the database can ingest Call Detail Records (CDRs) and serve data to the GUI.

On a modern, well-tuned server (e.g., 24-core Xeon, 10Gbit NIC), a single VoIPmonitor instance can handle up to 10,000 concurrent calls with full RTP analysis and recording, or over 60,000 concurrent calls with SIP-only analysis.

1. Optimizing Packet Capturing (CPU & Network)

The most performance-critical task is the initial packet capture, which is handled by a single, highly optimized thread (t0). If this thread's CPU usage (`t0CPU` in logs) approaches 100%, you are hitting the capture limit. Here are the primary methods to optimize it, from easiest to most advanced.

A. Use a Modern Linux Kernel & VoIPmonitor Build

Modern Linux kernels (3.2+) and VoIPmonitor builds include TPACKET_V3 support, a high-speed packet capture mechanism. This is the single most important factor for high performance.

- Recommendation: Always use a recent Linux distribution (like AlmaLinux, Rocky Linux, or Debian) and the latest VoIPmonitor static binary. With this combination, a standard Intel 10Gbit NIC can often handle up to 2 Gbit/s of VoIP traffic without special drivers.

B. Network Stack & Driver Tuning

For high-traffic environments (>500 Mbit/s), fine-tuning the network driver and kernel parameters is essential.

NIC Ring Buffer

The ring buffer is a queue between the network card driver and the VoIPmonitor application. A larger buffer prevents packet loss during short CPU usage spikes.

- Check maximum size:

ethtool -g eth0

- Set the RX ring to the pre-set maximum reported by the previous command (e.g., 16384):

ethtool -G eth0 rx 16384
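The two steps above can be combined so that the maximum is read from the card rather than typed in by hand. The following is a minimal sketch, assuming the usual `ethtool -g` layout in which a `Pre-set maximums:` block precedes a `Current hardware settings:` block. The function names `ring_rx_max` and `ring_rx_current` are illustrative and not part of VoIPmonitor or ethtool.

```shell
#!/bin/sh
# Hypothetical helpers: read `ethtool -g` output on stdin and print RX ring sizes.
# Assumes "Pre-set maximums:" appears before "Current hardware settings:".

# Print the RX value from the "Pre-set maximums" block.
ring_rx_max() {
    awk '/^Pre-set maximums:/ {sec="max"}
         /^Current hardware settings:/ {sec="cur"}
         sec=="max" && $1=="RX:" {print $2; exit}'
}

# Print the RX value from the "Current hardware settings" block.
ring_rx_current() {
    awk '/^Current hardware settings:/ {sec="cur"}
         sec=="cur" && $1=="RX:" {print $2; exit}'
}

# Usage on a live system (requires root for the -G call):
#   max=$(ethtool -g eth0 | ring_rx_max)
#   cur=$(ethtool -g eth0 | ring_rx_current)
#   [ "$cur" -lt "$max" ] && ethtool -G eth0 rx "$max"
```

Matching on `$1=="RX:"` deliberately skips the `RX Mini:` and `RX Jumbo:` lines, whose first field is `RX` without the colon.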

Interrupt Coalescing

This setting batches multiple hardware interrupts into one, reducing CPU overhead.

ethtool -C eth0 rx-usecs 1022
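As a rough worked example of what `rx-usecs 1022` implies, the arithmetic below treats the value as a fixed delay between interrupts on a single RX queue. This is a simplification: drivers with adaptive moderation behave differently, and the 500,000 packets-per-second figure is only an illustrative load, not a number from this guide.

```shell
#!/bin/sh
# Rough arithmetic only: treats rx-usecs as a fixed delay between interrupts
# on one RX queue. The function names are illustrative.

# Upper bound on interrupts per second for a given rx-usecs value.
max_irq_per_sec() {
    echo $(( 1000000 / $1 ))
}

# Packets that accumulate in the ring between two interrupts
# at a given packet rate (packets per second).
packets_per_irq() {
    rx_usecs=$1
    pps=$2
    echo $(( pps * rx_usecs / 1000000 ))
}

max_irq_per_sec 1022          # prints 978
packets_per_irq 1022 500000   # prints 511
```

Under these assumptions about 511 packets wait in the ring between interrupts, which is why a long coalescing interval should be paired with a large ring buffer.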

Applying Settings Persistently

To make these settings permanent, add them to your network configuration. For Debian/Ubuntu using `/etc/network/interfaces`:

auto eth0

iface eth0 inet manual

up ip link set $IFACE up

up ip link set $IFACE promisc on

up ethtool -G $IFACE rx 16384

up ethtool -C $IFACE rx-usecs 1022

Note: Modern systems using NetworkManager or systemd-networkd require different configuration methods.

C. Advanced Offloading and Kernel-Bypass Solutions

If kernel and driver tuning are insufficient, you can offload the capture process entirely by bypassing the kernel's network stack.

- DPDK (Data Plane Development Kit): DPDK is a set of libraries and drivers for fast packet processing. VoIPmonitor can leverage DPDK to read packets directly from the network card, completely bypassing the kernel and significantly reducing CPU overhead. This is a powerful, open-source solution for achieving multi-gigabit capture rates on commodity hardware. For detailed installation and configuration instructions, see the official DPDK guide.

- PF_RING ZC/DNA: A commercial software driver from ntop.org that also dramatically reduces CPU load by bypassing the kernel. In tests, it can reduce CPU usage from 90% to as low as 20% for the same traffic load.

- Napatech SmartNICs: Specialized hardware acceleration cards that deliver packets to VoIPmonitor with near-zero CPU overhead (<3% CPU for 10 Gbit/s traffic). This is the ultimate solution for extreme performance requirements.

2. Optimizing Disk I/O

VoIPmonitor's modern storage engine is highly optimized to minimize random disk access, which is the primary cause of I/O bottlenecks.

VoIPmonitor Storage Strategy

Instead of writing a separate PCAP file for each call (which causes massive I/O load), VoIPmonitor groups all calls starting within the same minute into a single compressed `.tar` archive. This changes the I/O pattern from thousands of small, random writes to a few large, sequential writes, reducing IOPS (I/O Operations Per Second) by a factor of 10 or more. A standard 7200 RPM SATA drive can typically handle up to 2000 concurrent calls with full recording.
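To put the 2000-call figure in perspective, the sketch below estimates the raw, uncompressed write volume for full RTP recording. All inputs are assumptions that do not come from this guide: G.711 with 20 ms packetization, IPv4, untagged Ethernet, two RTP streams per call, and classic pcap per-packet records. SIP traffic and compression are ignored.

```shell
#!/bin/sh
# Back-of-the-envelope estimate of uncompressed RTP capture volume.
# Assumptions (not from the article): G.711, 20 ms packetization, IPv4,
# untagged Ethernet, two RTP streams per call, 16-byte pcap record headers.

rtp_write_bytes_per_sec() {
    calls=$1
    payload=160                       # G.711: 8000 samples/s * 20 ms, 1 byte each
    headers=$(( 12 + 8 + 20 + 14 ))   # RTP + UDP + IPv4 + Ethernet
    pcap_record=16                    # per-packet pcap record header
    pps=50                            # one packet every 20 ms per stream
    echo $(( calls * 2 * pps * (payload + headers + pcap_record) ))
}

rtp_write_bytes_per_sec 2000   # prints 46000000 (about 46 MB/s before compression)
```

The same 2000 calls also produce 200,000 packets per second, so the limiting factor on a spinning disk tends to be the number of separate write operations rather than the byte volume, which is what the per-minute archive grouping addresses.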

Filesystem Tuning (ext4)

For the spool directory (`/var/spool/voipmonitor`), using an optimized ext4 filesystem can improve performance.

- Example setup:

# Format partition without a journal (use with caution, requires battery-backed RAID controller)

mke2fs -t ext4 -O ^has_journal /dev/sda2

# Add to /etc/fstab for optimal performance

/dev/sda2 /var/spool/voipmonitor ext4 errors=remount-ro,noatime,data=writeback,barrier=0 0 0
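After editing `/etc/fstab` it is worth confirming that the spool filesystem is really mounted with the intended options. The helper below is a minimal sketch that reads `/proc/mounts`-style lines and lists which of `noatime` and `data=writeback` are missing. The function name `missing_mount_opts` is hypothetical, and the barrier setting is left out because the kernel may report it under a different option name.

```shell
#!/bin/sh
# Hypothetical helper: given a mount point, read /proc/mounts-style lines on
# stdin and print each recommended option that is NOT active on that mount.
# Prints "not-mounted" if the mount point is absent from the input.

missing_mount_opts() {
    mountpoint=$1
    awk -v mp="$mountpoint" '
        $2 == mp {
            # Field 4 holds the comma-separated mount options.
            n = split($4, opts, ",")
            for (i = 1; i <= n; i++) have[opts[i]] = 1
            if (!("noatime" in have))        print "noatime"
            if (!("data=writeback" in have)) print "data=writeback"
            found = 1
            exit
        }
        END { if (!found) print "not-mounted" }'
}

# Usage on a live system:
#   missing_mount_opts /var/spool/voipmonitor < /proc/mounts
```

Empty output means both options are in effect.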

RAID Controller Cache Policy

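A misconfigured RAID controller is a common bottleneck. For database and spool workloads, the cache policy should always be set to WriteBack, not WriteThrough. This requires a healthy Battery Backup Unit (BBU). If the BBU is dead or missing, many controllers fall back to WriteThrough and you may need to force the WriteBack setting; be aware that without a working BBU, any writes still held in the controller cache are lost on power failure.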

- The specific commands vary by vendor (`megacli`, `ssacli`, `perccli`). Refer to the original, more detailed version of this article or vendor documentation for specific commands for LSI, HP, and Dell controllers.

3. Optimizing Database Performance (MySQL/MariaDB)

A well-tuned database is critical for both data ingestion from the sensor and responsiveness of the GUI.

Key Configuration Parameters

These settings should be placed in your `my.cnf` or a file in `/etc/mysql/mariadb.conf.d/`.

- `innodb_buffer_pool_size`: This is the most important setting. It defines the amount of memory InnoDB uses to cache both data and indexes. A good starting point is 50-70% of the server's total available RAM. For a dedicated database server, 8GB is a good minimum, with 32GB or more being optimal for large databases.

- `innodb_flush_log_at_trx_commit = 2`: The default value of `1` forces a write to disk for every single transaction, which is very slow without a high-end, battery-backed RAID controller. Setting it to `2` relaxes this, flushing logs to the OS cache and writing to disk once per second. This dramatically improves write performance with a minimal risk of data loss (max 1-2 seconds) in case of a full OS crash.

- `innodb_file_per_table = 1`: This instructs InnoDB to store each table and its indexes in its own `.ibd` file, rather than in one giant, monolithic `ibdata1` file. This is essential for performance, management, and features like table compression and partitioning.

- LZ4 Compression: For modern MySQL/MariaDB versions, using LZ4 for page compression offers a great balance of reduced storage size and minimal CPU overhead.

# In my.cnf [mysqld] section

innodb_compression_algorithm=lz4

# For MariaDB, you may also need to set default table formats:

# mysqlcompress_type = PAGE_COMPRESSED=1 in voipmonitor.conf
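The first three parameters above can be turned into a starting `[mysqld]` fragment with a little arithmetic. This is only a sketch: the function name `mysqld_snippet` is hypothetical, the percentage is whatever you choose within the 50-70% guideline, and the result should be reviewed before it goes into a real configuration file.

```shell
#!/bin/sh
# Hypothetical helper: print a [mysqld] fragment with innodb_buffer_pool_size
# set to a chosen percentage of RAM. Input is MemTotal in kB, the unit used
# by /proc/meminfo; the pool size is emitted in MiB with an "M" suffix.

mysqld_snippet() {
    mem_kb=$1
    percent=$2
    pool_mb=$(( mem_kb * percent / 100 / 1024 ))
    cat <<EOF
[mysqld]
innodb_buffer_pool_size = ${pool_mb}M
innodb_flush_log_at_trx_commit = 2
innodb_file_per_table = 1
EOF
}

# Usage on a live system:
#   mysqld_snippet "$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)" 60

# Example: 64 GiB of RAM at 50% gives innodb_buffer_pool_size = 32768M
mysqld_snippet 67108864 50
```

On a server that also runs the sensor or GUI, a percentage toward the lower end of the range leaves more memory for those processes.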

Database Partitioning

Partitioning is a feature where VoIPmonitor automatically splits large tables (like `cdr`) into smaller, more manageable pieces, typically one per day. This is enabled by default and is highly recommended.

- Benefits:

* Massively improves query performance in the GUI, as it only needs to scan partitions relevant to the selected time range.

* Allows for instant deletion of old data by dropping a partition, which is thousands of times faster than running a `DELETE` query on millions of rows.
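To see the partitions that exist on a given installation, the standard `information_schema.PARTITIONS` view can be queried. The sketch below only builds the SQL text; the function name `partition_list_sql` is hypothetical, and the schema name `voipmonitor` in the usage line is an assumption that should be replaced with your actual database name.

```shell
#!/bin/sh
# Hypothetical helper: print a query that lists the partitions of one table,
# oldest first, with the server's row-count estimate for each.

partition_list_sql() {
    schema=$1
    table=$2
    printf "SELECT PARTITION_NAME, TABLE_ROWS FROM information_schema.PARTITIONS WHERE TABLE_SCHEMA='%s' AND TABLE_NAME='%s' ORDER BY PARTITION_ORDINAL_POSITION;\n" "$schema" "$table"
}

# Usage (schema name is an assumption; credentials are taken from your client config):
#   partition_list_sql voipmonitor cdr | mysql
```

Removing one of the listed partitions is done with `ALTER TABLE ... DROP PARTITION ...`, which discards the whole partition at once instead of deleting rows individually.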

4. Monitoring Live Performance

VoIPmonitor logs detailed performance metrics every 10 seconds to syslog. You can watch them live:

tail -f /var/log/syslog # Debian/Ubuntu

tail -f /var/log/messages # CentOS/RHEL

A sample log line:

voipmonitor[15567]: calls[315][355] PS[C:4 S:29/29 R:6354 A:6484] SQLq[0] heap[0|0|0] comp[54] [12.6Mb/s] t0CPU[5.2%] ... RSS/VSZ[323|752]MB

- `calls[X][Y]`: X = active calls, Y = total calls in memory.

- `SQLq[C]`: Number of SQL queries waiting to be sent to the database. If this number is consistently growing, your database cannot keep up.

- `heap[A|B|C]`: Memory usage percentages for internal buffers. If A (main heap) reaches 100%, packets will be dropped.

- `t0CPU[X%]`: The most important CPU metric. This is the usage of the main packet capture thread. If it consistently exceeds 90-95%, you are at your server's capture limit.
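The metrics described above can be pulled out of a status line with standard text tools, for example to feed an alerting script. The following is a minimal sketch based only on the sample line shown earlier; the function names `metric` and `t0cpu_over` are illustrative, and the pattern assumes each metric keeps the `name[value]` form.

```shell
#!/bin/sh
# Hypothetical helpers for VoIPmonitor status lines read on stdin.

# Print the content of the first bracket pair following the given metric name,
# e.g. "5.2%" for t0CPU or "0|0|0" for heap. Prints nothing if not found.
metric() {
    name=$1
    sed -n "s/.*${name}\[\([^]]*\)\].*/\1/p"
}

# Exit 0 if any input line reports t0CPU above the given percentage, else 1.
t0cpu_over() {
    limit=$1
    metric t0CPU | tr -d '%' |
        awk -v lim="$limit" 'BEGIN { rc = 1 }
                             { if ($1 + 0 > lim + 0) rc = 0 }
                             END { exit rc }'
}

# Usage on a live system (log path depends on the distribution):
#   tail -n 1 /var/log/syslog | t0cpu_over 90 && echo "capture thread near its limit"
```

A single reading above the threshold is not conclusive; as noted above, what matters is whether the value stays high consistently.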

AI Summary for RAG

Summary: This article is an expert guide to scaling and performance tuning VoIPmonitor for high-traffic environments. It identifies the three main system bottlenecks: Packet Capturing (CPU-bound), Disk I/O (for PCAP storage), and Database Performance (MySQL/MariaDB). For packet capture, it details tuning network card drivers with `ethtool`, the benefits of modern kernels with `TPACKET_V3`, and advanced kernel-bypass solutions like DPDK, PF_RING, and Napatech SmartNICs. For I/O, it explains VoIPmonitor's efficient TAR-based storage and provides tuning tips for ext4 filesystems and RAID controller cache policies. The largest section focuses on MySQL/MariaDB tuning, emphasizing the importance of `innodb_buffer_pool_size`, `innodb_flush_log_at_trx_commit`, `innodb_file_per_table`, and native LZ4 compression. It also explains the critical role of database partitioning for query performance and data retention. Finally, it details how to interpret live performance statistics from the syslog to diagnose bottlenecks.

Keywords: performance tuning, scaling, high throughput, bottleneck, CPU bound, t0CPU, packet capture, TPACKET_V3, DPDK, ethtool, ring buffer, interrupt coalescing, PF_RING, Napatech, I/O bottleneck, IOPS, filesystem tuning, ext4, RAID cache, WriteBack, megacli, ssacli, MySQL performance, MariaDB tuning, innodb_buffer_pool_size, innodb_flush_log_at_trx_commit, innodb_file_per_table, LZ4 compression, database partitioning, syslog, monitoring, high calls per second, CPS

Key Questions:

- How do I scale VoIPmonitor for thousands of concurrent calls?

- What are the main performance bottlenecks in VoIPmonitor?

- How can I fix high t0CPU usage?

- What is DPDK and when should I use it?

- What are the best `my.cnf` settings for a high-performance VoIPmonitor database?

- How does database partitioning work and why is it important?

- My sniffer is dropping packets, how do I fix it?

- How do I interpret the performance metrics in the syslog?

- Should I use a dedicated database server for VoIPmonitor?