Scaling: Difference between revisions

No edit summary |

No edit summary |

||

| Line 1: | Line 1: | ||

= Introduction = | = Introduction = | ||

Our maximum throughput on single server (24 cores Xeon, 10Gbit NIC card) is around 20 000 calls. But VoIPmonitor can work in cluster mode where remote sensors writes to one central database with central GUI server. Usual 4 core Xeon server (E3-1220) is able to handle up to | Our maximum throughput on single server (24 cores Xeon, 10Gbit NIC card) is around 20 000 calls. But VoIPmonitor can work in cluster mode where remote sensors writes to one central database with central GUI server. Usual 4 core Xeon server (E3-1220) is able to handle up to 6000 simultaneous calls and probably more. | ||

VoIPmonitor is able to use all available CPU cores but there are several bottlenecks which you should consider before deploying and configuring VoIPmonitor. We do free full presale support in case you need help to deploy our solution. | VoIPmonitor is able to use all available CPU cores but there are several bottlenecks which you should consider before deploying and configuring VoIPmonitor. We do free full presale support in case you need help to deploy our solution. | ||

Basically there are three types of bottlenecks - CPU, disk I/O throughput (writing pcap files) and storing CDR to MySQL (I/O or CPU). The sniffer is multithreaded application but certain tasks cannot be split to more threads. Main thread is reading packets from kernel - this is the top most consuming thread and it depends on CPU type and kernel version (and number of packets per second). Below | Basically there are three types of bottlenecks - CPU, disk I/O throughput (writing pcap files) and storing CDR to MySQL (I/O or CPU). The sniffer is multithreaded application but certain tasks cannot be split to more threads. Main thread is reading packets from kernel - this is the top most consuming thread and it depends on CPU type and kernel version (and number of packets per second). Below 500Mbit of traffic you do not need to be worried about CPU on usual CPU (Xeon, i5). More details about CPU bottleneck see following chapter CPU bound. | ||

I/O bottleneck is most common problem for voipmonitor and it depends if you store to local mysql database along with storing pcap files on the same server and the same storage. See next chapter I/O throughput. | I/O bottleneck is most common problem for voipmonitor and it depends if you store to local mysql database along with storing pcap files on the same server and the same storage. See next chapter I/O throughput. | ||

| Line 12: | Line 12: | ||

== Reading packets == | == Reading packets == | ||

Main thread which reads packets from kernel cannot be split into more threads which limits number of concurrent calls for the whole server. CPU used for this thread is equivalent to running "tcpdump -i ethX -w /dev/null" which you can use as a test if your server is able to handle your traffic. Since version 8 there is each 10 seconds output to syslog or to stdout (if running with -k -v 1 switch) which measures how many CPU% takes the thread number 0 which is reading packets from kernel, if this is > | Main thread which reads packets from kernel cannot be split into more threads which limits number of concurrent calls for the whole server. CPU used for this thread is equivalent to running "tcpdump -i ethX -w /dev/null" which you can use as a test if your server is able to handle your traffic. Since version 8 there is each 10 seconds output to syslog or to stdout (if running with -k -v 1 switch) which measures how many CPU% takes the thread number 0 which is reading packets from kernel, if this is >90% it means you need better CPU or upgrade kernel and libpcap or special network cards or DNA ntop.org driver for intel cards). We have tested sniffer on countless type of servers and basically the limit is somewhere around at 800Mbit for usual 1Gbit card and 2.6.32 kernel. New libpcap 1.5.3 and 3.x kernel support TPACKET_V3 which means that you need to compile this libpcap againts recent linux kernel. In our tests we are able to sniff on 10Gbit intel card 1Gbit traffic without special drivers - just using the latest libpcap and kernel. We are not sure yet if upgrading to new kernel and libpcap is enough because the TPACKET_V3 is detected from some /usr/include headers. We have tested this on centos 6.5 and debian 7 which supports TPCAKET_V3 but our statically distributed voipmonitor binaries are compiled on debian 6 for backward compatibility which means that it does not support TPACKET_V3 even on kernels supporting this feature. 
We have tested centos 6.5 and kernel 3.8 with libpcap 1.5.3 more than 1Gbit without single packet loss which was not possible without TPACKET_V3. | ||

There is also important thing to check for high throughput especially if you use kernel < 3.X which do not balance IRQ from RX NIC interrupts by default. You need to check /proc/interrupts to see if your RX queues are not bound only to CPU0 in case you see in syslog that CPU0 is on 100%. If you are not sure just upgrade your kernel to 3.X and the IRQ balance is by default spread to more CPU automatically. | There is also important thing to check for high throughput especially if you use kernel < 3.X which do not balance IRQ from RX NIC interrupts by default. You need to check /proc/interrupts to see if your RX queues are not bound only to CPU0 in case you see in syslog that CPU0 is on 100%. If you are not sure just upgrade your kernel to 3.X and the IRQ balance is by default spread to more CPU automatically. | ||

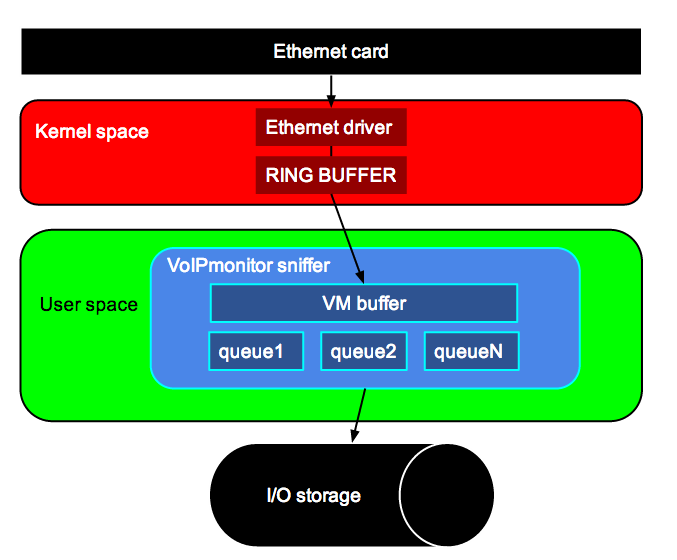

On following picture you can see how packets are proccessed from ethernet card to Kernel space to ethernet driver which queues packets to ring buffer. Ring buffer (available since kernel 2.6.32 and libpcap > 1.0) is read by libpcap library to its own voipmonitor buffer. Kernel ring buffer is circular buffer directly in kernel which reads packets from ethernet card and overwrites the oldest one if not read in time. Ring buffer can be large at maximum 2GB (this is actual limit in libpcap library version 1.3). VoIPmonitor sniffer reads packets from ring buffer (thread T0) and pass packets to dynamic queue allocated on heap memory which can be configured to use as much RAM as you are able to allocate which conceals packet loss when disk I/O spikes occurs. This heap memory can be also compressed which doubles the room for packets but takes some CPU (30% for 1Gbit traffic on newer xeons). If the heap memory is full the sniffer (if enabled) can write packets to files which can be any path - dedicated storage are recommended - this feature is for those who cannot afford to loose single packet or for cases where the sniffer mirrors data to remote sniffer and if the connection breaks for some time the sniffer can write data from heap to temporary files which are sent back once the connection is reestablished. | |||

Another consideration is limit number of rxtx queues on your nic card which is by default number of cores in the system which adds a lot of overhead causing more CPU. two cores are fine with 1Gbit traffic on 10Gbit intel card. This is how you can limit it: | |||

modprobe ixgbe DCA=2,2 RSS=2,2 | |||

You can also buy special DNA drivers from ntop.org and compile their libpcap version which offloads CPU from 90% to 20-40% for 1.5Gbit (thats what we tested). | |||

Another option is to buy Napatech NIC card whith theirs libpcap which offloads CPU to 3% for >1Gbit traffic. | |||

On following picture you can see how packets are proccessed from ethernet card to Kernel space to ethernet driver which queues packets to ring buffer. Ring buffer (available since kernel 2.6.32 and libpcap > 1.0) is read by libpcap library to its own voipmonitor buffer. Kernel ring buffer is circular buffer directly in kernel which reads packets from ethernet card and overwrites the oldest one if not read in time. Ring buffer can be large at maximum 2GB (this is actual limit in libpcap library version 1.3). VoIPmonitor sniffer reads packets from ring buffer (thread T0) and pass packets to dynamic queue allocated on heap memory which can be configured to use as much RAM as you are able to allocate which conceals packet loss when disk I/O spikes occurs. This heap memory can be also compressed which doubles the room for packets but takes some CPU (30% for 1Gbit traffic on newer xeons but it takes 100% cpu for 500Mbit on lower xeons CPU so performance varies). If the heap memory is full the sniffer (if enabled) can write packets to files which can be any path - dedicated storage are recommended - this feature is for those who cannot afford to loose single packet or for cases where the sniffer mirrors data to remote sniffer and if the connection breaks for some time the sniffer can write data from heap to temporary files which are sent back once the connection is reestablished. | |||

Since sniffer 8.4 we have implemented more threading which is not enabled by default. If you have traffic over ~400MBit you should consider to enable it (see [[Sniffer_configuration#threading_mod]]) | Since sniffer 8.4 we have implemented more threading which is not enabled by default. If you have traffic over ~400MBit you should consider to enable it (see [[Sniffer_configuration#threading_mod]]) | ||

| Line 27: | Line 37: | ||

tail -f /var/log/messages (on redhat/centos) | tail -f /var/log/messages (on redhat/centos) | ||

calls[ | voipmonitor[15567]: calls[315][355] PS[C:4 S:29/29 R:6354 A:6484] SQLq[C:0 M:0 Cl:0] heap[0|0|0] comp[54] [12.6Mb/s] t0CPU[5.2%] t1CPU[1.2%] t2CPU[0.9%] tacCPU[4.6|3.0|3.7|4.5%] RSS/VSZ[323|752]MB | ||

*voipmonitor[15567] - 15567 is PID of the process | |||

*calls - [X][Y] - X is actual calls in voipmonitor memory. Y is total calls in voipmonitor memory (actual + queue buffer) | *calls - [X][Y] - X is actual calls in voipmonitor memory. Y is total calls in voipmonitor memory (actual + queue buffer) including SIP register | ||

*PS - call/packet counters per second. C: number of calls / second, S: X/Y - X is number of valid SIP packets / second on sip ports. Y is number of all packets on sip ports. R: number of RTP packets / second of registered calls by voipmonitor per second. A: all packets per second | |||

*SQLqueue - is number of sql statements (INSERTs) waiting to be written to MySQL. If this number is growing the MySQL is not able to handle it. See [[Scaling#innodb_flush_log_at_trx_commit]] | *SQLqueue - is number of sql statements (INSERTs) waiting to be written to MySQL. If this number is growing the MySQL is not able to handle it. See [[Scaling#innodb_flush_log_at_trx_commit]] | ||

heap[A|B|C] - A: % of used heap memory. If 100 voipmonitor is not able to process packets in realtime due to CPU or I/O. B: number of % used memory in packetbuffer. C: number of % used for async write buffers (if 100% I/O is blocking and heap will grow and than ring buffer will get full and then packet loss will occur) | |||

*hoverruns - if this number grows the heap buffer was completely filled. In this case the primary thread will stop reading packets from ringbuffer and if the ringbuffer is full packets will be lost - this occurrence will be logged to syslog. | *hoverruns - if this number grows the heap buffer was completely filled. In this case the primary thread will stop reading packets from ringbuffer and if the ringbuffer is full packets will be lost - this occurrence will be logged to syslog. | ||

*comp - compression buffer ratio (if enabled) | *comp - compression buffer ratio (if enabled) | ||

*[12.6Mb/s] - total network throughput | |||

*t0CPU - This is %CPU utilization for thread 0. Thread 0 is process reading from kernel ring buffer. Once it is over 90% it means that the current setup is hitting limit processing packets from network card. Please write to support@voipmonitor.org if you hit this limit. | *t0CPU - This is %CPU utilization for thread 0. Thread 0 is process reading from kernel ring buffer. Once it is over 90% it means that the current setup is hitting limit processing packets from network card. Please write to support@voipmonitor.org if you hit this limit. | ||

*t1CPU - This is %CPU utilization for thread 1. Thread 1 is process reading packets from thread 0, adding it to the buffer and compress it (if enabled). | *t1CPU - This is %CPU utilization for thread 1. Thread 1 is process reading packets from thread 0, adding it to the buffer and compress it (if enabled). | ||

*t2CPU - This is %CPU utilization for thread 2. Thread 2 is process which parses all SIP packets. If >90% there the sensor is hitting limit - please contact support@voipmonitor.org if you see >90%. | *t2CPU - This is %CPU utilization for thread 2. Thread 2 is process which parses all SIP packets. If >90% there the sensor is hitting limit - please contact support@voipmonitor.org if you see >90%. | ||

* | *RSS/VSZ[323|752]MB - RSS stands for the resident size, which is an accurate representation of how much actual physical memory sniffer is consuming. VSZ stands for the virtual size of a process, which is the sum of memory it is actually using, memory it has mapped into itself (for instance the video card’s RAM for the X server), files on disk that have been mapped into it (most notably shared libraries), and memory shared with other processes. VIRT represents how much memory the program is able to access at the present moment. | ||

[[File:kernelstandarddiagram.png]] | [[File:kernelstandarddiagram.png]] | ||

| Line 48: | Line 60: | ||

=== Software driver alternatives === | === Software driver alternatives === | ||

*TPACKET_V3 - New libpcap 1.5.3 and 3.x kernel support TPACKET_V3 which means that you need to compile this libpcap againts recent linux kernel. In our tests we are able to sniff on 10Gbit intel card 1Gbit traffic without special drivers - just using the latest libpcap and kernel. | |||

*Direct NIC Access http://www.ntop.org/products/pf_ring/dna/ - We have tried DNA driver for stock 1Gbit Intel card which reduces 100% CPU load to 20%. | |||

*[[Pcap_worksheet]] | *[[Pcap_worksheet]] | ||

=== Hardware NIC cards === | === Hardware NIC cards === | ||

| Line 65: | Line 73: | ||

= I/O bottleneck = | = I/O bottleneck = | ||

For storing up to | For storing up to 200 simultaneous calls (with all SIP and RTP packets saving) you do not need to be worried about I/O performance much. For storing up to 500 calls your disk must have enabled write cache (some raid controllers are not set well for random write scenarios or has write cache disabled at all). Especially kvm virtual default settings does not use write-back cache. For up to 1000 calls you can use ordinary SATA 7.2kRPM disks with NCQ enabled - like Western digital RE4 edition (RE4 is important as it implements good NCQ) and we use it for installations for saving full SIP+RTP up to 1000 simultaneous calls. If you have more than 1000 simultaneous calls you can still use usual SATA disk but using cachedir feature (see below) or you need to look for some enterprise hardware raid and test the performance before you buy! Performance of such raids varies a lot and there is no general recommendation or working solutions which we can provide as a reference. | ||

Since version 10 the sniffer is compressing pcap files by default using asynchronous write queue which can be set to huge numbers - GB of RAM to help overcome I/O bottleneck and peaks. | |||

SSD disks are not recommended for pcap storing because of its low durability. | SSD disks are not recommended for pcap storing because of its low durability. | ||

Revision as of 15:19, 1 July 2014

Introduction

Our maximum throughput on a single server (24-core Xeon, 10Gbit NIC) is around 20 000 calls. VoIPmonitor can also work in cluster mode, where remote sensors write to one central database with a central GUI server. A usual 4-core Xeon server (E3-1220) is able to handle up to 6000 simultaneous calls, and probably more.

VoIPmonitor is able to use all available CPU cores, but there are several bottlenecks which you should consider before deploying and configuring VoIPmonitor. We provide free, full presale support in case you need help deploying our solution.

There are three types of bottlenecks: CPU, disk I/O throughput (writing pcap files), and storing CDRs to MySQL (I/O or CPU). The sniffer is a multithreaded application, but certain tasks cannot be split across more threads. The main thread reads packets from the kernel. It is the most CPU-consuming thread, and its load depends on the CPU type, the kernel version, and the number of packets per second. Below 500Mbit of traffic you do not need to worry about CPU on a usual CPU (Xeon, i5). For more details about the CPU bottleneck, see the following chapter, CPU bound.

The I/O bottleneck is the most common problem for voipmonitor. How severe it is depends on whether you store to a local MySQL database and store pcap files on the same server and the same storage. See the chapter I/O bottleneck.

CPU bound

Reading packets

The main thread, which reads packets from the kernel, cannot be split into more threads, and this limits the number of concurrent calls for the whole server. The CPU used by this thread is equivalent to running "tcpdump -i ethX -w /dev/null", which you can use to test whether your server is able to handle your traffic. Since version 8, every 10 seconds the sniffer writes output to syslog or to stdout (if running with the -k -v 1 switches) showing how much CPU% is used by thread number 0, which reads packets from the kernel. If this is >90%, you need a better CPU, an upgraded kernel and libpcap, special network cards, or the DNA driver from ntop.org for Intel cards. We have tested the sniffer on countless types of servers, and the limit is somewhere around 800Mbit for a usual 1Gbit card and a 2.6.32 kernel.

The new libpcap 1.5.3 and 3.x kernels support TPACKET_V3, which means that you need to compile this libpcap against a recent Linux kernel. In our tests we were able to sniff 1Gbit of traffic on a 10Gbit Intel card without special drivers, just using the latest libpcap and kernel. We are not sure yet whether upgrading to a new kernel and libpcap is enough, because TPACKET_V3 is detected from some /usr/include headers. We have tested this on CentOS 6.5 and Debian 7, which support TPACKET_V3, but our distributed static voipmonitor binaries are compiled on Debian 6 for backward compatibility, which means that they do not support TPACKET_V3 even on kernels supporting this feature. On CentOS 6.5 with kernel 3.8 and libpcap 1.5.3 we captured more than 1Gbit without a single packet lost, which was not possible without TPACKET_V3.

There is another important thing to check for high throughput, especially if you use a kernel < 3.X, which does not balance IRQs from RX NIC interrupts by default. If you see in syslog that CPU0 is at 100%, check /proc/interrupts to see whether your RX queues are bound only to CPU0. If you are not sure, just upgrade your kernel to 3.X, where IRQs are spread across more CPUs automatically by default.
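The /proc/interrupts check can be automated. The following is a minimal Python sketch, not part of VoIPmonitor: the function names and the sample text are hypothetical, and it assumes the usual /proc/interrupts layout (a header row of CPU names, then one row per IRQ with per-CPU counters followed by a description containing the interface name).

```python
# Sample text in the usual /proc/interrupts layout (made-up numbers).
SAMPLE = """\
           CPU0       CPU1       CPU2       CPU3
 45:     900000          0          0          0   PCI-MSI-edge      eth0-TxRx-0
 46:     100000          0          0          0   PCI-MSI-edge      eth0-TxRx-1
 47:         10         20         30         40   PCI-MSI-edge      eth1-TxRx-0
NMI:          0          0          0          0   Non-maskable interrupts
"""


def irq_counts_per_cpu(interrupts_text, nic="eth0"):
    """Sum the interrupt counters of all rows that mention `nic`, per CPU."""
    lines = interrupts_text.strip().splitlines()
    cpus = lines[0].split()  # header row: CPU0 CPU1 ...
    totals = [0] * len(cpus)
    for line in lines[1:]:
        fields = line.split()
        description = fields[1 + len(cpus):]
        # Skip rows that do not belong to the NIC (or have no description).
        if not any(nic in field for field in description):
            continue
        for i, value in enumerate(fields[1:1 + len(cpus)]):
            totals[i] += int(value)
    return dict(zip(cpus, totals))


def share_on_cpu0(counts):
    """Fraction of the NIC's interrupts that were handled by CPU0."""
    total = sum(counts.values())
    return counts.get("CPU0", 0) / total if total else 0.0


counts = irq_counts_per_cpu(SAMPLE, nic="eth0")
print(counts, "share on CPU0:", share_on_cpu0(counts))
```

On a real server you would pass the contents of /proc/interrupts instead of SAMPLE. A share close to 1.0 suggests that all RX queues are bound to CPU0.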

Another consideration is to limit the number of RX/TX queues on your NIC. By default it equals the number of cores in the system, which adds a lot of overhead and causes more CPU usage. Two are fine for 1Gbit of traffic on a 10Gbit Intel card. This is how you can limit it:

modprobe ixgbe DCA=2,2 RSS=2,2

You can also buy special DNA drivers from ntop.org and compile their libpcap version, which reduces CPU load from 90% to 20-40% for 1.5Gbit (that is what we tested).

Another option is to buy a Napatech NIC with their libpcap, which reduces CPU load to 3% for >1Gbit traffic.

The following picture shows how packets are processed on their way from the ethernet card to kernel space, where the ethernet driver queues packets to the ring buffer. The ring buffer (available since kernel 2.6.32 and libpcap > 1.0) is read by the libpcap library into voipmonitor's own buffer. The kernel ring buffer is a circular buffer directly in the kernel which reads packets from the ethernet card and overwrites the oldest one if it is not read in time. The ring buffer can be at most 2GB large (this is the actual limit in libpcap library version 1.3). The VoIPmonitor sniffer reads packets from the ring buffer (thread T0) and passes them to a dynamic queue allocated on heap memory, which can be configured to use as much RAM as you are able to allocate and which conceals packet loss when disk I/O spikes occur. This heap memory can also be compressed, which doubles the room for packets but takes some CPU (30% for 1Gbit of traffic on newer Xeons, but 100% CPU for 500Mbit on lower-end Xeons, so performance varies).

If the heap memory is full, the sniffer (if enabled) can write packets to files, which can be on any path (dedicated storage is recommended). This feature is for those who cannot afford to lose a single packet, or for cases where the sniffer mirrors data to a remote sniffer: if the connection breaks for some time, the sniffer can write data from the heap to temporary files, which are sent once the connection is reestablished.

Since sniffer 8.4 we have implemented more threading, which is not enabled by default. If you have traffic over ~400Mbit, you should consider enabling it (see Sniffer_configuration#threading_mod).

The jitterbuffer simulator uses a lot of CPU, and you can disable all three types of jitterbuffer if your server is not able to handle it (the parameters are jitterbuffer_f1, jitterbuffer_f2, jitterbuffer_adapt). If you need to disable some of the jitterbuffers, keep jitterbuffer_f2 enabled, as it is the most useful. The jitterbuffer runs in threads, and by default the number of threads equals the number of cores.

If the voipmonitor sniffer is running with at least "-v 1", you can watch several metrics:

tail -f /var/log/syslog (on debian/ubuntu)

tail -f /var/log/messages (on redhat/centos)

voipmonitor[15567]: calls[315][355] PS[C:4 S:29/29 R:6354 A:6484] SQLq[C:0 M:0 Cl:0] heap[0|0|0] comp[54] [12.6Mb/s] t0CPU[5.2%] t1CPU[1.2%] t2CPU[0.9%] tacCPU[4.6|3.0|3.7|4.5%] RSS/VSZ[323|752]MB

- voipmonitor[15567] - 15567 is PID of the process

- calls - [X][Y] - X is the number of actual calls in voipmonitor memory. Y is the total number of calls in voipmonitor memory (actual + queue buffer), including SIP registers

- PS - call/packet counters per second. C: number of calls / second. S: X/Y - X is the number of valid SIP packets / second on SIP ports, Y is the number of all packets / second on SIP ports. R: number of RTP packets / second belonging to calls registered by voipmonitor. A: all packets / second

- SQLqueue (SQLq in the output) - the number of SQL statements (INSERTs) waiting to be written to MySQL. If this number is growing, MySQL is not able to handle the load. See Scaling#innodb_flush_log_at_trx_commit

- heap[A|B|C] - A: % of used heap memory. If it is 100, voipmonitor is not able to process packets in real time due to CPU or I/O. B: % of used memory in the packetbuffer. C: % used for async write buffers (if it is 100%, I/O is blocking, the heap will grow, then the ring buffer will get full, and then packet loss will occur)

- hoverruns - if this number grows, the heap buffer was completely filled. In this case the primary thread stops reading packets from the ring buffer, and if the ring buffer is full, packets will be lost. This occurrence is logged to syslog.

- comp - compression buffer ratio (if enabled)

- [12.6Mb/s] - total network throughput

- t0CPU - %CPU utilization of thread 0. Thread 0 reads from the kernel ring buffer. Once it is over 90%, the current setup is hitting its limit for processing packets from the network card. Please write to support@voipmonitor.org if you hit this limit.

- t1CPU - %CPU utilization of thread 1. Thread 1 reads packets from thread 0, adds them to the buffer and compresses them (if enabled).

- t2CPU - %CPU utilization of thread 2. Thread 2 parses all SIP packets. If it is >90%, the sensor is hitting its limit. Please contact support@voipmonitor.org if you see >90%.

- RSS/VSZ[323|752]MB - RSS stands for the resident size, which is an accurate representation of how much actual physical memory the sniffer is consuming. VSZ stands for the virtual size of a process, which is the sum of memory it is actually using, memory it has mapped into itself (for instance the video card's RAM for the X server), files on disk that have been mapped into it (most notably shared libraries), and memory shared with other processes. VSZ represents how much memory the program is able to access at the present moment.
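To watch these counters automatically, the status line can be parsed with a few regular expressions. The following is a minimal Python sketch based only on the sample line above. The function names, the dictionary keys and the 90% threshold parameter are assumptions for illustration, and the exact line format may differ between sniffer versions.

```python
import re

# The sample status line from the text above.
SAMPLE = (
    "voipmonitor[15567]: calls[315][355] PS[C:4 S:29/29 R:6354 A:6484] "
    "SQLq[C:0 M:0 Cl:0] heap[0|0|0] comp[54] [12.6Mb/s] t0CPU[5.2%] "
    "t1CPU[1.2%] t2CPU[0.9%] tacCPU[4.6|3.0|3.7|4.5%] RSS/VSZ[323|752]MB"
)


def parse_status(line):
    """Extract a few counters from one sniffer status line."""
    stats = {}
    m = re.search(r"calls\[(\d+)\]\[(\d+)\]", line)
    if m:
        stats["calls"] = int(m.group(1))
        stats["calls_total"] = int(m.group(2))
    m = re.search(r"heap\[([\d.]+)\|([\d.]+)\|([\d.]+)\]", line)
    if m:
        stats["heap"] = tuple(float(x) for x in m.groups())
    for name in ("t0CPU", "t1CPU", "t2CPU"):
        m = re.search(name + r"\[([\d.]+)%\]", line)
        if m:
            stats[name] = float(m.group(1))
    m = re.search(r"\[([\d.]+)Mb/s\]", line)
    if m:
        stats["mbit"] = float(m.group(1))
    return stats


def check_limits(stats, cpu_limit=90.0):
    """Return warnings for the limits described in the list above."""
    found = []
    for name in ("t0CPU", "t2CPU"):
        if stats.get(name, 0.0) > cpu_limit:
            found.append(f"{name} above {cpu_limit}%")
    if stats.get("heap", (0.0, 0.0, 0.0))[0] >= 100:
        found.append("heap buffer full")
    return found


stats = parse_status(SAMPLE)
print(stats)
print(check_limits(stats))
```

In practice you would feed it lines from syslog (for example the output of tail -f) and alert when check_limits returns a non-empty list.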

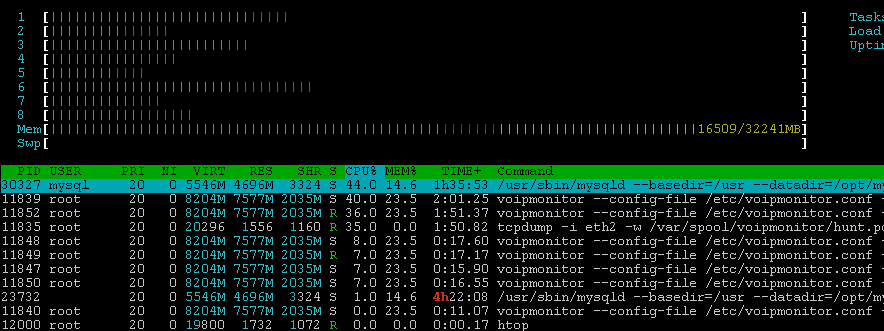

A good tool for measuring CPU usage is htop: http://htop.sourceforge.net/

Software driver alternatives

- TPACKET_V3 - The new libpcap 1.5.3 and 3.x kernels support TPACKET_V3, which means that you need to compile this libpcap against a recent Linux kernel. In our tests we were able to sniff 1Gbit of traffic on a 10Gbit Intel card without special drivers, just using the latest libpcap and kernel.

- Direct NIC Access http://www.ntop.org/products/pf_ring/dna/ - We have tried the DNA driver for a stock 1Gbit Intel card, which reduces 100% CPU load to 20%.

Hardware NIC cards

We have successfully tested 1Gbit and 10Gbit cards from Napatech, which deliver packets to voipmonitor at 0% CPU.

I/O bottleneck

For storing up to 200 simultaneous calls (saving all SIP and RTP packets) you do not need to worry much about I/O performance. For storing up to 500 calls your disk must have its write cache enabled (some RAID controllers are not set up well for random-write scenarios or have the write cache disabled altogether). In particular, the default settings of KVM virtual machines do not use a write-back cache. For up to 1000 calls you can use ordinary 7.2k RPM SATA disks with NCQ enabled, like the Western Digital RE4 edition (RE4 is important as it implements good NCQ). We use it for installations saving full SIP+RTP for up to 1000 simultaneous calls. If you have more than 1000 simultaneous calls, you can still use a usual SATA disk together with the cachedir feature (see below), or you need to look for an enterprise hardware RAID and test its performance before you buy! The performance of such RAIDs varies a lot, and there is no general recommendation or working solution which we can provide as a reference.
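The thresholds above can be summarised as a small lookup. This is only an illustrative Python sketch: the function name and the wording of the returned strings are made up, and the boundaries are the rough figures from the paragraph above, not hard limits.

```python
def storage_advice(simultaneous_calls):
    """Map a number of simultaneous calls (full SIP+RTP saving)
    to the storage tier described in the paragraph above."""
    if simultaneous_calls <= 200:
        return "any disk: I/O performance is not a concern"
    if simultaneous_calls <= 500:
        return "disk with write cache enabled"
    if simultaneous_calls <= 1000:
        return "7.2k RPM SATA disk with NCQ and write cache"
    return "SATA disk with cachedir, or a tested enterprise hardware RAID"


for calls in (150, 400, 900, 3000):
    print(calls, "->", storage_advice(calls))
```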

Since version 10 the sniffer compresses pcap files by default, using an asynchronous write queue which can be set to a huge size (gigabytes of RAM) to help overcome the I/O bottleneck and peaks.

SSD disks are not recommended for storing pcap files because of their low durability.

The VoIPmonitor sniffer produces the worst-case scenario for spinning disks: random writes. The situation gets worse with ext3/ext4 file systems, which by default use a journal and write metadata, thus adding more I/O writes. But ext4 can be tweaked for maximum performance by disabling the journal and applying some other tweaks, at the cost of reliability in case of a system crash. We recommend using a dedicated disk formatted with special ext4 switches. If you cannot use a dedicated disk for storing pcap files, use a dedicated partition formatted with the special tweaks (see below).

The fastest filesystem for the voipmonitor spool directory is ext4 with the following tweaks. Assuming your partition is /dev/sda2:

export mydisk=/dev/sda2
mke2fs -t ext4 -O ^has_journal $mydisk
tune2fs -O ^has_journal $mydisk
tune2fs -o journal_data_writeback $mydisk
#add following line to /etc/fstab
/dev/sda2 /var/spool/voipmonitor ext4 errors=remount-ro,noatime,nodiratime,data=writeback,barrier=0 0 0

If your disk is still not able to handle the traffic, you can enable the cachedir feature (voipmonitor.conf:cachedir), which stores all files on fast storage that can handle random writes, for example a RAM disk located at /dev/shm (every Linux distribution has this enabled for up to 50% of memory). After a file is closed (the call ends), voipmonitor automatically moves the file from this storage to the spooldir directory, which is located on slower storage, in guaranteed serial order, which eliminates the random-write problem. This also makes it possible to use network shares, which are usually too slow for the voipmonitor sniffer to write to directly.

MySQL performance

Write performance

Write performance depends a lot on whether the storage is also used for storing pcap files (thus sharing I/O with voipmonitor) and on how MySQL handles writes (the innodb_flush_log_at_trx_commit parameter, see below). Since sniffer version 6, MySQL tables use compression, which doubles write and read performance at almost no CPU cost (it depends on the CPU type and the amount of traffic).

innodb_flush_log_at_trx_commit

The default value of 1 means that each update transaction commit (or each statement outside of a transaction) needs to flush the log to disk, which is rather expensive, especially if you do not have a battery-backed cache. Many applications are fine with the value 2, which means the log is not flushed to disk on commit but only to the OS cache. The log is still flushed to disk each second, so you would normally not lose more than 1-2 seconds' worth of updates. The value 0 is a bit faster but a bit less safe, as you can lose transactions even if only the MySQL server crashes. The value 2 causes data loss only with a full OS crash. If you are importing into or altering the cdr table, it is strongly recommended to temporarily set innodb_flush_log_at_trx_commit = 0, and to turn off the binlog if you are importing CDRs via inserts.

innodb_flush_log_at_trx_commit = 2

compression

MySQL 5.1

Set this value in the [mysqld] section of my.cnf:

innodb_file_per_table = 1

MySQL > 5.1

MySQL> set global innodb_file_per_table = 1;
MySQL> set global innodb_file_format = barracuda;

Tune KEY_BLOCK_SIZE

If you choose KEY_BLOCK_SIZE=2 instead of 8, the compression will be twice as good, but with a CPU penalty on reads. We have tested the differences between no compression, 8kb and 2kb block size compression on 700 000 CDRs with the following result (on a single-core system; we do not know how it behaves on multi-core systems). The testing query is a SELECT with GROUP BY.

No compression - 1.6 seconds
8kb - 1.7 seconds
4kb - 8 seconds

Read performance

Read performance depends on how big the database is, how fast the disk operates, and how much memory is allocated for the InnoDB cache. Since sniffer version 7, all large tables use partitioning by days, which reduces the need to allocate a very large cache to get good performance for the GUI. Partitioning works since MySQL 5.1 and is highly recommended. It also allows old data to be removed instantly by dropping a partition instead of DELETEing rows, which can take hours on very large tables (millions of rows).

innodb_buffer_pool_size

This is a very important variable to tune if you are using InnoDB tables. InnoDB tables are much more sensitive to buffer size than MyISAM. MyISAM may work reasonably well with the default key_buffer_size even with a large data set, but InnoDB will crawl with the default innodb_buffer_pool_size. Also, the InnoDB buffer pool caches both data and index pages, so you do not need to leave space for the OS cache, and values up to 70-80% of memory often make sense for InnoDB-only installations.

We recommend setting this value to 50% of your available RAM: at least 2GB, with 8GB being optimal. It all depends on how many CDRs you have per day.

innodb_buffer_pool_size = 8G
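The 50% rule with a 2GB floor is easy to turn into a small calculation. The following Python sketch is only an illustration: the function names are hypothetical, it rounds down to whole gigabytes, and it writes the value with the G suffix that MySQL option files accept.

```python
def buffer_pool_size_gb(total_ram_gb, fraction=0.5, minimum_gb=2):
    """Half of the available RAM, but never less than the 2GB floor."""
    return max(minimum_gb, int(total_ram_gb * fraction))


def buffer_pool_config_line(total_ram_gb):
    """Format the result as a my.cnf line."""
    return f"innodb_buffer_pool_size = {buffer_pool_size_gb(total_ram_gb)}G"


for ram_gb in (4, 16, 64):
    print(ram_gb, "GB RAM ->", buffer_pool_config_line(ram_gb))
```

Note that on a server with very little RAM the 2GB floor can exceed half of the memory, so the result should be treated as a starting point only.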

Partitioning

Partitioning is enabled by default since version 7. If you want to benefit from it (which we strongly recommend), you need to start with a clean database, as there is no conversion procedure from an old database to a partitioned one. Just create a new database and start voipmonitor with it, and the partitioning will be created. You can turn off partitioning by setting cdr_partition = no in voipmonitor.conf.
