Alerts

Alerts & Reports

Alerts & Reports generate email notifications based on QoS parameters or SIP error conditions. The system also provides daily and ad hoc reports, and stores all generated alerts and reports in history.

Overview

The alert system monitors call quality and SIP signaling in real-time, triggering notifications when configured thresholds are exceeded.

Email Configuration Prerequisites

Emails are sent using PHP's mail() function, which relies on the server's Mail Transfer Agent (MTA) such as Exim, Postfix, or Sendmail. Configure your MTA according to your Linux distribution documentation.

Setting the Email "From" Address

To configure the "From" address that appears in outgoing alert emails:

- Navigate to GUI > Settings > System Configuration > Email / HTTP Referer

- Locate the field DEFAULT_EMAIL_FROM (default "From" address for outgoing emails)

- Set your desired email address (e.g., alerts@yourcompany.com)

This setting applies to all automated emails sent by VoIPmonitor, including:

- QoS alerts (RTP, SIP response, sensors)

- Daily reports

- License notifications

Setting Up the Cron Job

Alert processing requires a cron job that runs every minute:

# Add to /etc/crontab (adjust path based on your GUI installation)

echo "* * * * * root php /var/www/html/php/run.php cron" >> /etc/crontab

# Reload crontab

killall -HUP cron # Debian/Ubuntu

# or

killall -HUP crond # CentOS/RHEL
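Appending with >> adds a duplicate entry every time the command is re-run. A minimal idempotent variant is sketched below, assuming the crontab-format file is passed in explicitly; the helper name ensure_cron_line is hypothetical and not part of VoIPmonitor:

```bash
#!/bin/bash
# Hypothetical helper: append a line to a crontab-format file only if the
# exact same line is not already present.
ensure_cron_line() {
    local file="$1" line="$2"
    # -F: literal string, -x: whole-line match, -q: no output
    if grep -Fxq -- "$line" "$file" 2>/dev/null; then
        echo "already present"
    else
        printf '%s\n' "$line" >> "$file"
        echo "added"
    fi
}

# Usage (as root; adjust the GUI path to your installation):
# ensure_cron_line /etc/crontab "* * * * * root php /var/www/html/php/run.php cron"
```

Because grep -Fx compares whole lines literally, the asterisks in the cron schedule are not treated as patterns.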

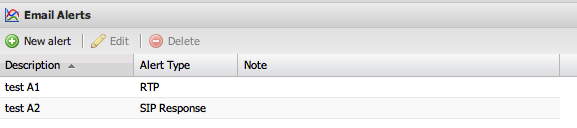

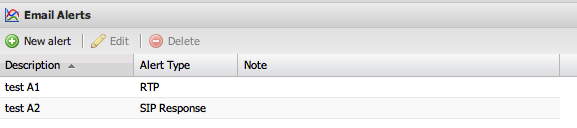

Configure Alerts

Email alerts can trigger on SIP protocol events or RTP QoS metrics. Access alerts configuration via GUI > Alerts.

Alert Types

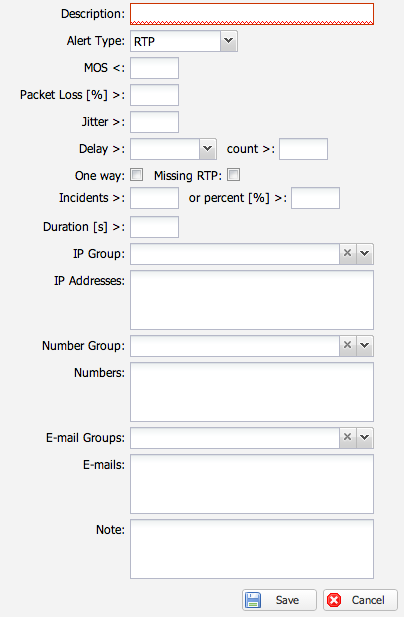

RTP Alerts

RTP alerts trigger based on voice quality metrics:

- MOS (Mean Opinion Score) - below threshold

- Packet loss - percentage exceeded

- Jitter - variation exceeded

- Delay (PDV) - latency exceeded

- One-way calls - answered but one RTP stream missing

- Missing RTP - answered but both RTP streams missing

Configure alerts to trigger when:

- Number of incidents exceeds a set value, OR

- Percentage of CDRs exceeds a threshold

RTP&CDR Alerts

RTP&CDR alerts combine RTP quality metrics with CDR-based conditions, including Post Dial Delay (PDD). These alerts are useful for monitoring call setup performance and detecting network latency issues.

Available Conditions:

In the filter-common tab, you can configure conditions including:

- PDD (Post Dial Delay) - Time between sending INVITE and receiving final response. Configure with comparison operators, for example PDD > 5 (in seconds), to alert on long call setup delays. This can also detect routing loops: looping calls keep retransmitting INVITE without receiving any response, so PDD becomes very large.

In the base config tab:

- Set the recipient email address for alert notifications

- Consider limiting the max-lines in body to prevent oversized emails when many CDRs match the alert condition

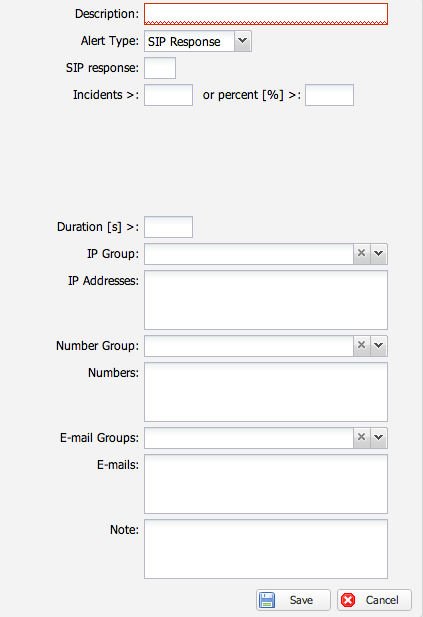

SIP Response Alerts

SIP response alerts trigger based on SIP response codes:

- Empty response field: Matches all call attempts per configured filters

- Response code 0: Matches unreplied INVITE requests (no response received). This is useful for detecting routing loops where calls continuously loop and never receive any SIP response.

- Specific codes: Match exact codes like 404, 503, etc.

Percentage Alerts and the "from all" Checkbox

SIP response alerts can trigger based either on the number of incidents or the percentage of CDRs exceeding a threshold.

When setting a percentage threshold (e.g., >10%):

- "from all" checkbox CHECKED: The percentage is calculated from ALL CDRs in the database (not just those matching filters).

- "from all" checkbox UNCHECKED: The percentage is calculated only from CDRs that match your common filters (IP groups, numbers, etc.). This is the correct setting when monitoring a specific IP group.
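Only the denominator changes between the two settings. The arithmetic below uses made-up counts (30 SIP 503 responses, 200 CDRs matching the Datora filters, 10,000 CDRs overall); the pct helper is hypothetical and only illustrates the calculation the GUI performs:

```bash
#!/bin/bash
# Hypothetical helper: incidents as a percentage of a CDR count (2 decimals).
pct() {
    awk -v n="$1" -v d="$2" 'BEGIN { if (d == 0) print "0.00"; else printf "%.2f\n", n * 100 / d }'
}

incidents=30      # CDRs with SIP 503 in the Datora group (example value)
group_cdrs=200    # all CDRs matching the common filters (example value)
all_cdrs=10000    # all CDRs in the database (example value)

echo "from all unchecked: $(pct "$incidents" "$group_cdrs")%"   # 15.00% -> above 10%, alert
echo "from all checked:   $(pct "$incidents" "$all_cdrs")%"     # 0.30%  -> below 10%, no alert
```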

Example: Monitor 503 responses for a specific IP group

To alert when the percentage of SIP 503 responses from the "Datora" IP group exceeds 10%:

1. Navigate to GUI > Alerts > filter common subtab

2. In IP/Number Group, select your group (e.g., "Datora")

3. UNCHECK "from all" - this ensures the percentage is calculated only from CDRs involving IPs in the Datora group

4. In the Base config subtab:

   * Set Type to SIP Response

   * Set Response code to 503

   * Set Incidents threshold to >10%

5. Configure recipient emails and save

If you leave "from all" CHECKED, the alert would calculate the 503 percentage across ALL CDRs in your database, which defeats the purpose of monitoring a specific IP group.

Detecting 408 Request Timeout Failures

A 408 Request Timeout response occurs when the caller sends multiple INVITE retransmissions and receives no final response. Alerting on 408 catches calls that time out after the UAS (User Agent Server) sends a provisional response such as 100 Trying but then sends no further responses.

Use Cases:

- Detect failing PBX or SBC (Session Border Controller) instances that accept calls but stop processing

- Monitor network failures where SIP messages stop flowing after initial dialog establishment

- Identify servers that become unresponsive mid-call setup

Configuration:

1. Navigate to GUI > Alerts

2. Create new alert with type SIP Response

3. Set Response code to 408

4. Optionally add Common Filters (IP addresses, numbers) to narrow scope

5. Save the alert

Understanding the Difference Between Response Code 0 and 408:

- Response code 0: Matches calls that received absolutely no response (not even a 100 Trying). These are network or reachability issues.

- Response code 408: Matches calls that received at least one provisional response (like 100 Trying) but eventually timed out. These indicate a server or application layer problem where the UAS stopped responding after initial acknowledgment.

Note: When a call times out with a 408 response, the CDR stores 408 as the Last SIP Response. Alerting on 408 will catch all call setup timeouts, including those where a 100 Trying was initially received.

Sensors Alerts

Sensors alerts monitor the health of VoIPmonitor probes and sniffer instances. This is the most reliable method to check if remote sensors are online and actively monitoring traffic.

Unlike simple network port monitoring (which may show a port as open even if the process is frozen or unresponsive), sensors alerts verify that the sensor instance is actively communicating with the VoIPmonitor GUI server.

Setup:

- Configure sensors in Settings > Sensors

- Create a sensors alert to be notified when a probe goes offline or becomes unresponsive

Sensor Health Monitoring Conditions

In addition to detecting offline sensors, you can configure sensors alert conditions to monitor sensor performance issues:

Conditions you can configure:

- Old CDR - Alerts when the sensor has not written CDRs to the database recently. This indicates the sensor is either not capturing calls, or there is a database insertion bottleneck preventing CDRs from being committed.

- Big SQL queue stat - Alerts when the SQL cache queue is growing. The SQL queue represents CDRs waiting in memory to be written to the database. A growing queue indicates the database cannot keep up with write operations.

SQL Queue Threshold Guidance:

The SQL queue is measured in the number of cache files waiting to be written. A healthy sensor keeps this number low.

- Normal operation: SQL queue should remain near 0 during all traffic conditions.

- Warning level: SQL queue above 20 files indicates a significant delay between packet capture and database insertion.

- Critical level: SQL queue above 100 files indicates severe database performance issues requiring immediate attention.

When configuring a "Big SQL queue stat" alert, setting the threshold to 20 files provides early warning before the problem escalates to critical levels.
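If you forward sensor health data to an external monitoring system, the same thresholds can be mapped to states. A minimal sketch, assuming you already have the SQL queue count for a sensor (how you obtain it is outside this sketch); the function name and the ok/warning/critical labels are hypothetical:

```bash
#!/bin/bash
# Hypothetical mapping of a sensor's SQL queue (cache files waiting)
# to the levels described above.
sql_queue_level() {
    local files="$1"
    if [ "$files" -gt 100 ]; then
        echo "critical"      # severe database performance problem
    elif [ "$files" -gt 20 ]; then
        echo "warning"       # significant capture-to-database delay
    else
        echo "ok"            # at or below the warning threshold
    fi
}

for q in 0 21 150; do
    echo "SQL queue $q -> $(sql_queue_level "$q")"
done
```

Values between 1 and 20 fall below the warning level here, but as noted above a healthy sensor stays near 0.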

Configuring Alert Actions:

When a sensor health alert triggers, you can configure the following actions:

- Email notification - Send alerts to administrators via email.

- External script execution - Execute a custom script with arguments about the triggering sensor and condition. This enables integration with monitoring systems like Nagios or Zabbix, or automated remediation workflows.

The external script receives information about which specific sensor triggered the alert and which health condition was violated, allowing you to build automated responses tailored to the type of failure.

SIP REGISTER RRD Beta Alerts

The SIP REGISTER RRD beta alert type monitors SIP REGISTER response times and alerts when REGISTER packets do not receive a response within a specified threshold (in milliseconds). This is useful for detecting network latency issues, packet loss, or failing switches that cause SIP retransmissions.

This alert serves as an effective proxy to monitor for registration issues, as REGISTER retransmissions often indicate problems with network connectivity or unresponsive SIP servers.

Configuration:

- Navigate to GUI > Alerts

- Create a new alert with type SIP REGISTER RRD beta

- Set the response time threshold in milliseconds (e.g., alert if REGISTER does not receive a response within 2000ms)

- Configure recipient email addresses

- Save the alert configuration

The system monitors REGISTER packets and triggers an alert when responses exceed the configured threshold, indicating potential SIP registration failures or network issues.

SIP Failed Register Beta Alerts

The SIP failed Register (beta) alert type detects SIP registration floods from a single IP address using multiple different usernames. This is a common attack pattern used in brute-force or credential-stuffing attacks where the attacker tries many different usernames from one source IP to find valid credentials.

Unlike the basic "SIP REGISTER flood" alert (which counts total registration attempts regardless of success/failure or username), this alert specifically monitors failed registrations and aggregates them by source IP address to detect patterns that indicate credential-guessing attacks.

Use Cases:

- Detect credential-stuffing attacks (one IP trying many different usernames in brute-force attempts)

- Identify botnets attempting account takeovers by cycling through username lists

- Monitor for registration abuse patterns that may indicate dictionary attacks

- Alert administrators when an IP shows signs of attempting unauthorized access to SIP accounts

How It Works:

This alert triggers when the total number of failed SIP registrations from any single IP address exceeds a specified threshold within a configured time interval. By focusing on failed registrations and grouping by source IP, it catches floods that use a variety of usernames from the same source.

Configuration:

- Navigate to GUI > Alerts

- Create a new alert with type SIP failed Register (beta)

- Set the threshold - maximum number of failed registrations allowed from a single IP (e.g., 20 failed registrations)

- Set the interval - time window in seconds to evaluate (e.g., 60 seconds to check for 20 failed registrations in one minute)

- Configure recipient email addresses

- Optionally add Common Filters (IP addresses, numbers) to narrow scope

- Save the alert configuration

Example Scenario:

An attacker attempts to brute-force SIP credentials by sending REGISTER requests with 50 different usernames from IP 203.0.113.50 within 60 seconds. All 50 attempts fail because the credentials are invalid.

If you configure the alert with threshold=20 and interval=60 seconds, the system will:

- Detect 50 failed registrations from 203.0.113.50 within 60 seconds

- Compare (50 failed) > (threshold 20) = TRUE

- Trigger an alert notifying administrators about the potential registration flood attack from IP 203.0.113.50
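The scenario above can be reproduced with a short awk aggregation. This is an illustration only: it assumes a hypothetical text export with one REGISTER result per line (<unix_ts> <src_ip> <username> <status>), whereas VoIPmonitor evaluates its own captured data internally:

```bash
#!/bin/bash
# Hypothetical input, one REGISTER result per line:
#   <unix_ts> <src_ip> <username> <status>     (status: "ok" or "failed")
# Prints "<ip> <count>" for each source IP whose failed registrations in the
# window (now - interval, now] exceed the threshold. All failures count,
# regardless of username.
failed_register_flood() {
    local threshold="$1" interval="$2" now="$3"
    awk -v thr="$threshold" -v iv="$interval" -v now="$now" '
        $4 == "failed" && $1 > now - iv && $1 <= now { failed[$2]++ }
        END { for (ip in failed) if (failed[ip] > thr) print ip, failed[ip] }
    '
}

# The scenario above: 50 usernames from 203.0.113.50 within 60 seconds,
# plus one unrelated failure from another address.
{
    for i in $(seq 1 50); do
        echo "$((1700000000 + i)) 203.0.113.50 user$i failed"
    done
    echo "1700000030 198.51.100.7 alice failed"
} | failed_register_flood 20 60 1700000060     # prints: 203.0.113.50 50
```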

Comparison with Other Registration Alerts:

| Alert Type | What It Monitors | Attack Detection |
|---|---|---|
| SIP failed Register (beta) | Failed registrations grouped by IP | Brute-force, credential stuffing |
| SIP REGISTER RRD beta | REGISTER response times | Network latency, packet loss |
| multiple register (beta) | Same account from multiple IPs | Compromised credentials, misuse |
| Realtime REGISTER flood | Total REGISTER attempts (any status) from IP | Flood/spam of any registration |

The SIP failed Register (beta) alert is specifically optimized to detect attacks that use many different usernames from a single IP, which is the hallmark of credential-guessing or dictionary attacks. For comprehensive registration attack detection, combine it with the rules described in Anti-Fraud Rules.

CDR Trends Alerts

The CDR trends alert type enables trend-based monitoring and alerting on aggregated CDR statistics, including ASR (Answer Seizure Ratio) and other metrics. This alert type compares current performance against historical baselines and triggers notifications when metrics deviate beyond configurable thresholds.

Use Cases:

- Monitor ASR drops or increases over time windows

- Detect sudden changes in call volume patterns

- Compare current hour/day/week against historical data

- Identify quality degradation trends before they become critical

Configuration Parameters:

| Parameter | Description | Example Values |
|---|---|---|
| Type | The metric to monitor for trend changes | ASR (Answer Seizure Ratio), Call count, ACD, etc. |
| Offset | Historical baseline period to compare against | 1 week, 1 day, 1 month |
| Range | Current time window to evaluate | 1 hour, 1 day, 1 week |
| Method | Calculation method for trend comparison | Deviation (detects % change), Threshold (absolute value) |
| Limit Inc./Limit Dec. | Percentage threshold for triggering alerts | 10%, 15%, 20% |
| IP whitelist | Optional filter to limit scope to specific IPs/agents | Source IP addresses or user agents |

Example Configuration - ASR Trend Alert:

To receive an alert when ASR drops by 10% compared to the previous week:

1. Navigate to GUI > Alerts

2. Create new alert with type CDR trends

3. Configure parameters:

- Type: ASR

- Offset: 1 week (compare current period to previous week)

- Range: 1 hour (evaluate hourly)

- Method: Deviation (percentage-based comparison)

- Limit Dec.: 10% (trigger when drop exceeds 10%)

- IP whitelist: (optional) specify specific test user agents or IP addresses

4. Set recipient email addresses

5. Save the alert configuration

How Deviation Method Works:

When using the Deviation method, the system calculates:

Deviation % = ((Current Value - Historical Baseline) / Historical Baseline) * 100

- Limit Inc. triggers when Deviation % > threshold (e.g., ASR increased by 15%)

- Limit Dec. triggers when Deviation % < -threshold (e.g., ASR decreased by 10%)

A 10% ASR drop means current ASR is 90% of the historical baseline.
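The formula and both limits can be checked by hand with a small sketch. The helper names are hypothetical; the example assumes an ASR of 50% in the baseline hour and 42% in the current hour:

```bash
#!/bin/bash
# Deviation % = ((current - baseline) / baseline) * 100
deviation_pct() {
    awk -v cur="$1" -v base="$2" 'BEGIN {
        if (base == 0) { print "n/a"; exit }    # no baseline to compare against
        printf "%.2f\n", (cur - base) / base * 100
    }'
}

# Prints "inc" if the rise exceeds limit_inc, "dec" if the drop exceeds
# limit_dec, otherwise "ok". Both limits are positive percentages.
trend_check() {
    awk -v cur="$1" -v base="$2" -v inc="$3" -v dec="$4" 'BEGIN {
        if (base == 0) { print "n/a"; exit }
        dev = (cur - base) / base * 100
        if (dev > inc)       print "inc"
        else if (dev < -dec) print "dec"
        else                 print "ok"
    }'
}

deviation_pct 42 50        # -16.00
trend_check 42 50 15 10    # dec
```

With these numbers the deviation is -16%, which is below -10%, so Limit Dec. = 10% would trigger while Limit Inc. = 15% would not.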

Understanding Offset vs Range:

- Offset defines the historical reference period (e.g., "1 week" means "same hour last week")

- Range defines the current evaluation window (e.g., "1 hour" means "current hour's ASR")

For example, with Offset=1 week and Range=1 hour, the system compares ASR for "today 09:00-10:00" against "last week 09:00-10:00".
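The two windows can be derived from a single end time. A minimal sketch, assuming GNU date (for date -d @epoch), Offset and Range expressed in seconds, and output in UTC; the function name is hypothetical, and whether VoIPmonitor aligns windows to full hours is not covered here:

```bash
#!/bin/bash
# Current window (Range) and baseline window (same Range, shifted back by Offset),
# both relative to end_epoch. Requires GNU date for "date -d @epoch".
trend_windows() {
    local end_epoch="$1" range_s="$2" offset_s="$3"
    local fmt='+%Y-%m-%d %H:%M'
    local cur_start=$((end_epoch - range_s))
    local base_end=$((end_epoch - offset_s))
    local base_start=$((base_end - range_s))
    echo "current: $(date -u -d "@$cur_start" "$fmt") - $(date -u -d "@$end_epoch" "$fmt")"
    echo "baseline: $(date -u -d "@$base_start" "$fmt") - $(date -u -d "@$base_end" "$fmt")"
}

# Offset = 1 week (604800 s), Range = 1 hour (3600 s), end = 2024-05-14 10:00 UTC
trend_windows 1715680800 3600 604800
```

To get the epoch for another end time, use date -u -d '2024-05-14 10:00' +%s.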

Multiple Register Beta Alerts

The multiple register (beta) alert type detects SIP accounts that are registered from multiple different IP addresses. This is useful for identifying potential security issues, configuration errors, or unauthorized use of SIP credentials.

This alert specifically finds phone numbers or SIP accounts that have registered from more than one distinct IP address within the monitored timeframe.

Use Cases:

- Detect SIP account compromise (credential theft leading to registrations from unauthorized IPs)

- Identify configuration issues where phones are registering from multiple networks unexpectedly

- Monitor for roaming behavior when multi-IP registration is not expected

- Audit SIP account usage across distributed environments

Configuration:

- Navigate to GUI > Alerts

- Create a new alert with type multiple register (beta)

- Configure recipient email addresses

- Optionally add Common Filters (IP addresses, numbers) to narrow scope - leave filters empty to check ALL SIP numbers/accounts across all customers

- Save the alert configuration

Alert Scope:

- With filters: Monitors only the specific IP addresses, numbers, or groups defined in the Common Filters section

- Without filters: Monitors all SIP numbers/accounts across all customers in your system

The alert will trigger whenever it detects a SIP account that has registered from multiple distinct IP addresses, providing details about the affected account(s) and the IP addresses observed.
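The detection logic amounts to counting distinct source IPs per account. A sketch, assuming a hypothetical input of one successful REGISTER per line in the form <account> <source_ip>; the function name multi_ip_accounts is hypothetical:

```bash
#!/bin/bash
# Hypothetical input, one successful REGISTER per line: <account> <source_ip>
# Prints "<account> <ip1,ip2,...>" for accounts seen from more than one distinct IP.
multi_ip_accounts() {
    awk '
        !seen[$1, $2]++ {                        # first time this account/IP pair appears
            n[$1]++
            ips[$1] = ips[$1] (ips[$1] == "" ? "" : ",") $2
        }
        END { for (a in n) if (n[a] > 1) print a, ips[a] }
    ' | sort
}

printf '%s\n' "1001 10.0.0.5" "1001 10.0.0.5" "1002 10.0.0.6" "1001 198.51.100.20" |
    multi_ip_accounts    # prints: 1001 10.0.0.5,198.51.100.20
```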

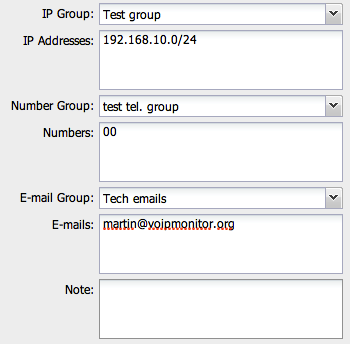

Common Filters

All alert types support the following filters:

| Filter | Description |
|---|---|
| IP/Number Group | Apply alert to predefined groups (from Groups menu) |
| IP Addresses | Individual IPs or ranges (one per line) |
| Numbers | Individual phone numbers or prefixes (one per line) |
| Email Group | Send alerts to group-defined email addresses |
| Emails | Individual recipient emails (one per line) |
| External script | Path to custom script to execute when alert triggers (see below) |

Using External Scripts for Alert Actions

Beyond email notifications, alerts can execute custom scripts when triggered. This enables integration with third-party systems (webhooks, Datadog, Slack, custom monitoring tools) without relying on email.

Configuration

1. Navigate to GUI > Alerts

2. Create or edit an alert (RTP, SIP Response, Sensors, etc.)

3. In the configuration form, locate the External script field

4. Enter the full path to your custom script (e.g., /usr/local/bin/alert-webhook.sh)

5. Save the alert configuration

The script will execute immediately when the alert triggers.

Script Arguments

The custom script receives alert data as command-line arguments. The format is identical to anti-fraud scripts (see Anti-Fraud Rules):

| Argument | Description |
|---|---|
| $1 | Alert ID (numeric identifier) |
| $2 | Alert name/type |
| $3 | Unix timestamp of alert trigger |
| $4 | JSON-encoded alert data |
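When writing or debugging an action script, it helps to check the arguments before doing any work. A minimal skeleton following the argument order in the table above; the function name handle_alert and the validation rules (digits only for ID and timestamp) are assumptions, not VoIPmonitor requirements:

```bash
#!/bin/bash
# Hypothetical skeleton: validate the four alert arguments, then act on them.
handle_alert() {
    if [ "$#" -lt 4 ]; then
        echo "usage: handle_alert <alert_id> <alert_name> <unix_ts> <json_data>" >&2
        return 2
    fi
    local alert_id="$1" alert_name="$2" ts="$3" data="$4"
    case "$alert_id" in ''|*[!0-9]*) echo "invalid alert id: $alert_id" >&2; return 2 ;; esac
    case "$ts" in ''|*[!0-9]*) echo "invalid timestamp: $ts" >&2; return 2 ;; esac
    # Replace this line with the real action (webhook, log entry, ...)
    printf 'alert %s (%s) at %s, %d characters of JSON\n' "$alert_id" "$alert_name" "$ts" "${#data}"
}
```

Call it as handle_alert "$@" at the end of your script. You can also exercise it by hand, for example handle_alert 1 test "$(date +%s)" '{"cdr":[]}'.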

Alert Data Structure

The JSON in the fourth argument contains CDR IDs affected by the alert:

{

"cdr": [12345, 12346, 12347],

"alert_type": "MOS below threshold",

"threshold": 3.5,

"actual_value": 2.8

}

Use the cdr array to query additional information from the database if needed.
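Before the cdr array is placed into an SQL IN (...) clause, each element should be checked. A sketch, assuming the IDs have already been extracted into separate arguments (for example with jq -r '.cdr[]'); the helper name cdr_id_list is hypothetical:

```bash
#!/bin/bash
# Hypothetical helper: join CDR IDs with commas for "WHERE id IN (...)",
# refusing anything that is not made of digits only.
cdr_id_list() {
    local id out=""
    for id in "$@"; do
        case "$id" in
            ''|*[!0-9]*) echo "invalid CDR id: $id" >&2; return 1 ;;
        esac
        out="${out:+$out,}$id"
    done
    [ -n "$out" ] || { echo "no CDR ids" >&2; return 1; }
    printf '%s\n' "$out"
}

cdr_id_list 12345 12346 12347     # 12345,12346,12347
```

In a script this could be used as ids=$(printf '%s\n' "$4" | jq -r '.cdr[]') followed by in_list=$(cdr_id_list $ids) || exit 1; leaving $ids unquoted is intentional so that each ID becomes a separate argument.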

Example: Send Webhook to Datadog

This bash script posts an alert notification as JSON to an HTTP webhook endpoint, for example a receiver that forwards events to Datadog. It requires curl and jq:

#!/bin/bash
# /usr/local/bin/datadog-alert.sh

# Configuration
WEBHOOK_URL="https://webhook.site/your-custom-url"
DATADOG_API_KEY="your-datadog-api-key"

# Parse arguments
ALERT_ID="$1"
ALERT_NAME="$2"
TIMESTAMP="$3"
ALERT_DATA="$4"

# Convert Unix timestamp to readable date
DATE=$(date -d "@$TIMESTAMP" '+%Y-%m-%d %H:%M:%S')

# Build the payload with jq so that quotes in the alert name or missing
# fields (threshold/actual_value become null) cannot produce invalid JSON
PAYLOAD=$(jq -n \
  --arg alert_id "$ALERT_ID" \
  --arg alert_name "$ALERT_NAME" \
  --arg triggered_at "$DATE" \
  --argjson data "$ALERT_DATA" \
  '{alert_id: $alert_id,
    alert_name: $alert_name,
    triggered_at: $triggered_at,
    cdr_count: ($data.cdr | length),
    threshold: $data.threshold,
    actual_value: $data.actual_value,
    source: "voipmonitor"}')

# Send webhook (adjust the auth header to what your endpoint expects;
# Datadog's own HTTP API, for example, uses a DD-API-KEY header)
curl -X POST "$WEBHOOK_URL" \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $DATADOG_API_KEY" \
  -d "$PAYLOAD"

Make the script executable:

chmod +x /usr/local/bin/datadog-alert.sh

Example: Send Slack Notification

#!/bin/bash
# /usr/local/bin/slack-alert.sh
SLACK_WEBHOOK="https://hooks.slack.com/services/YOUR/WEBHOOK/URL"
ALERT_NAME="$2"
ALERT_DATA="$4"

cdrCount=$(echo "$ALERT_DATA" | jq -r '.cdr | length')

# jq escapes the alert name, so quotes in it cannot break the JSON
PAYLOAD=$(jq -n --arg name "$ALERT_NAME" --arg count "$cdrCount" \
  '{text: ("VoIPmonitor Alert: " + $name),
    attachments: [{color: "danger",
                   fields: [{title: "CDRs affected", value: $count}]}]}')

curl -X POST "$SLACK_WEBHOOK" \
  -H "Content-Type: application/json" \
  -d "$PAYLOAD"

Example: Store Alert Details in File

#!/bin/bash

# /usr/local/bin/log-alert.sh

LOG_DIR="/var/log/voipmonitor-alerts"

mkdir -p "$LOG_DIR"

# Log all arguments for debugging

echo "=== Alert triggered at $(date) ===" >> "$LOG_DIR/alerts.log"

echo "Alert ID: $1" >> "$LOG_DIR/alerts.log"

echo "Alert name: $2" >> "$LOG_DIR/alerts.log"

echo "Timestamp: $3" >> "$LOG_DIR/alerts.log"

echo "Data: $4" >> "$LOG_DIR/alerts.log"

echo "" >> "$LOG_DIR/alerts.log"

Example: Access Source IP Addresses

When querying the CDR database from an alert script, IP addresses are stored as decimal integers in the cdr table. To convert them to human-readable dotted-decimal format (e.g., 185.107.80.4), use either PHP's long2ip() function or MySQL's INET_NTOA() function.

Using PHP's long2ip() (Recommended for Post-Processing)

If you fetch the raw integer value from the database and convert it in your script:

#!/usr/bin/php
<?php
// Parse alert data ($argv[4] is the JSON argument; $argv[0] is the script path)
$alert = json_decode($argv[4]);

// Cast every ID to int so only numbers reach the SQL query
$cdrIds = implode(',', array_map('intval', $alert->cdr));

// Query the CDR table - note: sipcallerip is a decimal integer
$query = "SELECT id, sipcallerip, sipcalledip
          FROM voipmonitor.cdr
          WHERE id IN ($cdrIds)";

// escapeshellarg() quotes the query safely for the shell
$command = "mysql -h MYSQLHOST -u MYSQLUSER -pMYSQLPASS -N -e " . escapeshellarg($query);
exec($command, $results);

// Process results and convert IP addresses
foreach ($results as $line) {
    list($id, $callerIP, $calledIP) = explode("\t", trim($line));
    // Convert decimal integer to dotted-decimal format
    $callerIPFormatted = long2ip((int)$callerIP);
    $calledIPFormatted = long2ip((int)$calledIP);
    echo "CDR ID $id: Caller IP $callerIPFormatted, Called IP $calledIPFormatted\n";
    // Example: long2ip(3110817796) returns "185.107.80.4"
}
?>

Using MySQL's INET_NTOA() (Recommended for Database Queries)

If you prefer to handle conversion in the SQL query itself:

#!/usr/bin/php
<?php
// Parse alert data
$alert = json_decode($argv[4]);

// Cast every ID to int so only numbers reach the SQL query
$cdrIds = implode(',', array_map('intval', $alert->cdr));

// Query with IP conversion done in MySQL
$query = "SELECT id, INET_NTOA(sipcallerip) AS caller_ip, INET_NTOA(sipcalledip) AS called_ip
          FROM voipmonitor.cdr
          WHERE id IN ($cdrIds)";

// escapeshellarg() quotes the query safely for the shell
$command = "mysql -h MYSQLHOST -u MYSQLUSER -pMYSQLPASS -N -e " . escapeshellarg($query);
exec($command, $results);

// Process results - IPs are already formatted
foreach ($results as $line) {
    list($id, $callerIP, $calledIP) = explode("\t", trim($line));
    echo "CDR ID $id: Caller IP $callerIP, Called IP $calledIP\n";
}
?>

Common IP Columns in CDR Table

The following columns contain IP addresses (all stored as decimal integers):

| Column | Description |
|---|---|
| sipcallerip | SIP signaling source IP |
| sipcalledip | SIP signaling destination IP |
| rtpsrcipX | RTP source IP for stream X (where X = 0-9) |
| rtpdstipX | RTP destination IP for stream X (where X = 0-9) |

Troubleshooting IP Format Issues

If your alert script receives IP addresses as large numbers (e.g., 3110817796 instead of 185.107.80.4):

1. Verify you are querying the cdr table directly (not using formatted variables)

2. Use long2ip() in PHP or INET_NTOA() in MySQL to convert the value

3. Check that the column is not already being converted by another layer of the application

For reference:

- long2ip(3110817796) returns 185.107.80.4

- long2ip(3232235877) returns 192.168.1.101

- long2ip(2130706433) returns 127.0.0.1
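If your alert script is written in shell rather than PHP, the same conversion can be done with shell arithmetic. A minimal IPv4-only sketch; the function names are hypothetical, and inputs are assumed to be well-formed (no leading zeros in octets, which shell arithmetic would treat as octal):

```bash
#!/bin/bash
# IPv4 only: shell counterparts of long2ip()/INET_NTOA() and INET_ATON(),
# using 64-bit shell arithmetic.
int_to_ip() {
    local n="$1"
    echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

ip_to_int() {
    local IFS=.
    set -- $1              # split "a.b.c.d" into $1 $2 $3 $4 (unquoted on purpose)
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

int_to_ip 3110817796       # 185.107.80.4
ip_to_int 192.168.1.101    # 3232235877
```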

Important Notes

- IP Address Format: IP addresses in the cdr table are stored as decimal integers. Use long2ip() (PHP) or INET_NTOA() (MySQL) to convert them to dotted-decimal format.

- Script execution time: The alert processor waits for the script to complete. Keep scripts fast (under 5 seconds) or run them in the background if processing takes longer.

- Script permissions: Ensure the script is executable by the web server user (typically www-data or apache).

- Error handling: Script failures are logged but do not prevent email alerts from being sent.

- Querying CDRs: The script receives CDR IDs in the JSON data. Query the cdr table to retrieve detailed information such as caller numbers and call duration.

- Security: Validate input before using it in commands or database queries to prevent injection attacks.
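One way to follow the execution-time advice is to hand the slow part to a detached background process and exit at once. A minimal sketch; run_detached and slow-part.sh are hypothetical names, and output goes to a log file because nobody reads the script's stdout:

```bash
#!/bin/bash
# Start a command detached from the alert processor and return immediately.
# stdout/stderr go to a log file; stdin comes from /dev/null.
run_detached() {
    local log="$1"
    shift
    nohup "$@" >> "$log" 2>&1 < /dev/null &
}

# Usage at the end of an alert script (slow-part.sh is hypothetical):
# run_detached /var/log/voipmonitor-alerts/slow.log /usr/local/bin/slow-part.sh "$1" "$2" "$3" "$4"
# exit 0
```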

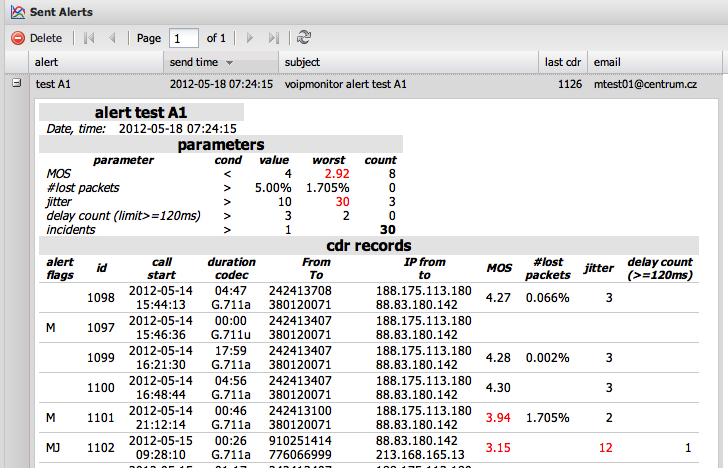

Sent Alerts

All triggered alerts are saved in history and can be viewed via GUI > Alerts > Sent Alerts. The content matches what was sent via email.

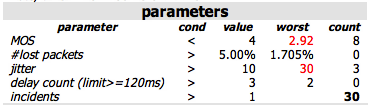

Parameters Table

The parameters table shows QoS metrics with problematic values highlighted for quick identification.

CDR Records Table

The CDR records table lists all calls that triggered the alert. Each row includes alert flags indicating which thresholds were exceeded:

- (M) - MOS below threshold

- (J) - Jitter exceeded

- (P) - Packet loss exceeded

- (D) - Delay exceeded
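The flags are a per-CDR comparison of each metric with its threshold. A sketch of that comparison, assuming example thresholds (MOS 3.5, jitter 30 ms, packet loss 1%, delay 150 ms) rather than VoIPmonitor defaults; the helper name alert_flags is hypothetical:

```bash
#!/bin/bash
# Arguments: mos jitter_ms loss_pct delay_ms  mos_min jitter_max loss_max delay_max
# Prints the flags for every threshold the call violates.
alert_flags() {
    awk -v mos="$1" -v jit="$2" -v loss="$3" -v dly="$4" \
        -v mos_min="$5" -v jit_max="$6" -v loss_max="$7" -v dly_max="$8" 'BEGIN {
        f = ""
        if (mos < mos_min)   f = f "(M)"    # MOS below threshold
        if (jit > jit_max)   f = f "(J)"    # jitter exceeded
        if (loss > loss_max) f = f "(P)"    # packet loss exceeded
        if (dly > dly_max)   f = f "(D)"    # delay exceeded
        print f
    }'
}

alert_flags 2.8 45 0.5 100  3.5 30 1 150   # (M)(J)
```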

Anti-Fraud Alerts

VoIPmonitor includes specialized anti-fraud alert rules for detecting attacks and fraudulent activity. These include:

- Realtime concurrent calls monitoring

- SIP REGISTER flood/attack detection

- SIP PACKETS flood detection

- Country/Continent destination alerts

- CDR/REGISTER country change detection

For detailed configuration of anti-fraud rules and custom action scripts, see Anti-Fraud Rules.

Alerts Based on Custom Reports

In addition to native alert types (RTP, SIP response, Sensors), VoIPmonitor supports generating alerts from custom reports. This workflow enables alerts based on criteria not available in native alert types, such as SIP header values captured via CDR custom headers.

Limitations of Custom Report Alerts

Custom report alerts are designed for filtering by SIP header values captured as CDR custom headers. They have the following limitations:

- No "Group By" functionality for threshold-based alerts: You cannot create an alert that triggers only when multiple events from the same caller ID or called number occur. For example, you cannot configure an alert that triggers when the same caller ID generates multiple SIP 486 responses, while ignoring single isolated failures from different callers.

- Scheduled reports vs. threshold alerts: CDR Summary reports can group data by caller number or called number, but these are scheduled reports that send data on a time-based schedule (e.g., daily), not threshold-based alerts that trigger when specific conditions are met.

- No alert-level aggregation: SIP Response alerts aggregate by total counts or percentages across all calls matching your filters, but cannot aggregate or group by specific caller/called numbers within those filtered results.

Workaround:

The closest available workflow is to create a **CDR Summary daily report** that:

1. Filters by the SIP response code (e.g., 486)

2. Groups by source number (caller) or destination number (called)

3. Sends a scheduled email (e.g., every 15 minutes or hourly)

This report will show which caller numbers have generated failures, but you must manually review the data to identify patterns. The report will be sent on schedule regardless of whether any failures occurred, and there is no way to configure it to only trigger when a specific threshold per unique caller is exceeded.

Feature Request:

Alerting based on grouped thresholds (e.g., "alert if the same caller ID generates >X SIP 486 responses") is a requested feature not currently available in VoIPmonitor. If you require this functionality, submit a feature request describing your specific use case.

Workflow Overview

1. Capture custom SIP headers in the database

2. Create a custom report filtered by the custom header values

3. Generate an alert from that report

4. Configure alert email options (e.g., limit email size)

Example: Alert on SIP Max-Forwards Header Value

This example shows how to receive an alert when the SIP Max-Forwards header value drops below 15.

Step 1: Configure Sniffer Capture

Add the header to your /etc/voipmonitor.conf configuration file:

# Capture Max-Forwards header

custom_headers = Max-Forwards

Restart the sniffer to apply changes:

service voipmonitor restart

Step 2: Configure Custom Header in GUI

1. Navigate to GUI > Settings > CDR Custom Headers

2. Select Max-Forwards from the available headers

3. Enable Show as Column to display it in CDR views

4. Save configuration

Step 3: Create Custom Report

1. Navigate to GUI > CDR Custom Headers or use the Report Generator

2. Create a filter for calls where Max-Forwards is less than 15

3. Since custom headers store string values, use a filter expression that matches the desired values:

15 14 13 12 11 10 0_ _

Include additional space-separated values or use NULL to match other ranges as needed.

4. Run the report to verify it captures the expected calls

Step 4: Generate Alert from Report

You can create an alert based on this custom report using the Daily Reports feature:

1. Navigate to GUI > Reports > Configure Daily Reports

2. Click Add Daily Report

3. Configure the filter to target the custom header criteria (e.g., Max-Forwards < 15)

4. Set the schedule (e.g., run every hour)

5. Save the daily report configuration

Step 5: Limit Alert Email Size (Optional)

If the custom report generates many matching calls, the alert email can become large. To limit the email size:

1. Edit the daily report

2. Go to the Basic Data tab

3. Set the max-lines in body option to the desired limit (e.g., 100 lines)

Additional Use Cases

This workflow can be used for various custom monitoring scenarios:

- SIP headers beyond standard SIP response codes - Monitor any custom SIP header

- Complex filtering logic - Create reports based on multiple custom header filters

- Threshold monitoring for string fields - When numeric comparison is not available, use string matching

For more information on configuring custom headers, see CDR Custom Headers.

Troubleshooting Email Alerts

If email alerts are not being sent, the issue is typically with the Mail Transfer Agent (MTA) rather than VoIPmonitor.

Step 1: Test Email Delivery from Command Line

Before investigating complex issues, verify your server can send emails:

# Test using the 'mail' command

echo "Test email body" | mail -s "Test Subject" your.email@example.com

If this fails, the issue is with your MTA configuration, not VoIPmonitor.

Step 2: Check MTA Service Status

Ensure the MTA service is running:

# For Postfix (most common)

sudo systemctl status postfix

# For Exim (Debian default)

sudo systemctl status exim4

# For Sendmail

sudo systemctl status sendmail

If the service is not running or not installed, install and configure it according to your Linux distribution's documentation.

Step 3: Check Mail Logs

Examine the MTA logs for specific error messages:

# Debian/Ubuntu

tail -f /var/log/mail.log

# RHEL/CentOS/AlmaLinux/Rocky

tail -f /var/log/maillog

Common errors and their meanings:

| Error Message | Cause | Solution |
|---|---|---|
| Connection refused | MTA not running or firewall blocking | Start MTA service, check firewall rules |
| Relay access denied | SMTP relay misconfiguration | See "Configuring SMTP Relay" below |
| Authentication failed | Incorrect credentials | Verify credentials in sasl_passwd |
| Host or domain name lookup failed | DNS issues | Check /etc/resolv.conf |
| Greylisted | Temporary rejection | Wait and retry, or whitelist sender |
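To see which of these errors dominate, you can count them in the log. A minimal sketch; the patterns are the messages from the table above, matched case-insensitively, and the exact wording in your log may differ by MTA and version. The function name scan_mail_log is hypothetical:

```bash
#!/bin/bash
# Count lines matching each error message from the table above (case-insensitive).
scan_mail_log() {
    local log="$1" pattern
    for pattern in "Connection refused" "Relay access denied" "Authentication failed" \
                   "Host or domain name lookup failed" "Greylisted"; do
        printf '%s: %s\n' "$pattern" "$(grep -ci -- "$pattern" "$log")"
    done
}

# Usage: scan_mail_log /var/log/mail.log   (or /var/log/maillog on RHEL-based systems)
```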

Step 4: Check Mail Queue

Emails may be stuck in the queue if delivery is failing:

# View the mail queue

mailq

# Force immediate delivery attempt (Postfix)

postqueue -f

Deferred or failed messages in the queue contain error details explaining why delivery failed.

Configuring SMTP Relay

If you encounter "Relay access denied" errors, your Postfix server cannot send emails through your external SMTP server. There are two solutions:

Solution 1: Configure External SMTP to Permit Relaying (Recommended for Trusted Networks)

If the VoIPmonitor server is in a trusted network, configure your external SMTP server to permit relaying from the VoIPmonitor server's IP address:

1. Access your external SMTP server configuration

2. Add the VoIPmonitor server's IP address to the allowed relay hosts (the mynetworks parameter if the external server runs Postfix)

3. Save the configuration and reload the external SMTP server (for Postfix: postfix reload)

Solution 2: Configure Postfix SMTP Authentication (Recommended for Remote SMTP)

If using an external SMTP server that requires authentication, configure Postfix to authenticate using SASL:

1. Install SASL authentication packages:

# Debian/Ubuntu

sudo apt-get install libsasl2-modules

# RHEL/CentOS/AlmaLinux/Rocky

sudo yum install cyrus-sasl-plain

2. Configure Postfix to use the external SMTP relay:

Edit /etc/postfix/main.cf:

# Use external SMTP as relay host (brackets skip the MX lookup; the key in sasl_passwd must match this value exactly)

relayhost = [smtp.yourprovider.com]:587

# Enable SASL authentication

smtp_sasl_auth_enable = yes

# Use SASL password file

smtp_sasl_password_maps = hash:/etc/postfix/sasl_passwd

# Do not use SASL mechanisms that allow anonymous authentication

smtp_sasl_security_options = noanonymous

# Enable TLS (recommended)

smtp_tls_security_level = encrypt

3. Create the SASL password file with your SMTP credentials:

# Create the file (replace username and password with your SMTP credentials)

echo "[smtp.yourprovider.com]:587 username:password" | sudo tee /etc/postfix/sasl_passwd

# Secure the file (rw root only)

sudo chmod 600 /etc/postfix/sasl_passwd

# Create the Postfix hash database

sudo postmap /etc/postfix/sasl_passwd

# Reload Postfix

sudo systemctl reload postfix

4. Test email delivery:

echo "Test email" | mail -s "SMTP Relay Test" your.email@example.com

If successful, emails should be delivered through the authenticated SMTP relay.
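Postfix looks up credentials in sasl_passwd using the relay destination exactly as written in relayhost, so [smtp.yourprovider.com]:587 and smtp.yourprovider.com:587 are different keys. A minimal sketch that derives both values from the same inputs to keep them in sync; the function names are hypothetical, and write_sasl_passwd assumes it runs as root with the standard /etc/postfix paths.

```shell
# Hypothetical helpers: keep relayhost and the sasl_passwd key identical.
relay_key() {
  printf '[%s]:%s\n' "$1" "$2"            # host port -> [host]:port
}

sasl_passwd_entry() {
  # host port user password -> "[host]:port user:password"
  printf '%s %s:%s\n' "$(relay_key "$1" "$2")" "$3" "$4"
}

write_sasl_passwd() {
  # Writes the credentials file, restricts it to root, builds the hash
  # database and reloads Postfix. Run as root.
  local file=/etc/postfix/sasl_passwd
  ( umask 077 && sasl_passwd_entry "$1" "$2" "$3" "$4" > "$file" ) || return 1
  chmod 600 "$file"
  postmap "$file" && systemctl reload postfix
  echo "main.cf must contain: relayhost = $(relay_key "$1" "$2")"
}
```

For example, write_sasl_passwd smtp.yourprovider.com 587 username password produces the same entry as the manual steps and prints the matching relayhost line.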

Step 5: Verify Cronjob

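Ensure the alert processing script runs every minute. The entry from "Setting Up the Cron Job" lives in the system crontab, which crontab -l does not display:

# Check the system crontab

grep run.php /etc/crontab

# Check root's personal crontab

sudo crontab -l

In /etc/crontab you should see:

* * * * * root php /var/www/html/php/run.php cron

If it is missing, append it to /etc/crontab as shown in "Setting Up the Cron Job" above, then reload cron:

killall -HUP cron

If you prefer root's personal crontab (sudo crontab -e), omit the user field, because per-user crontabs do not have one:

* * * * * php /var/www/html/php/run.php cron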
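The two crontab locations use different line formats: /etc/crontab has a user field (root) between the schedule and the command, while a crontab edited with crontab -e does not, so a copied line that still contains root tries to run a command named root. A minimal sketch of a checker for both formats, assuming the entry uses five asterisks as in the examples; has_alert_cron is a hypothetical name.

```shell
# Hypothetical checker. Usage: has_alert_cron FILE [system|user]
# system: /etc/crontab format, "m h dom mon dow USER command"
# user:   crontab -e format,   "m h dom mon dow command"
# Exit status 0 if an every-minute run.php cron entry of the right shape exists.
has_alert_cron() {
  awk -v kind="${2:-system}" '
    /^[ \t]*#/ { next }                              # ignore comment lines
    /run\.php cron/ {
      if ($1 != "*" || $2 != "*" || $3 != "*" || $4 != "*" || $5 != "*") next
      is_php = ($6 ~ /php[0-9.]*$/)                  # is field 6 the php binary?
      if (kind == "system" && !is_php) found = 1     # system: field 6 is a user
      if (kind == "user" && is_php) found = 1        # user: no user field
    }
    END { exit(found ? 0 : 1) }
  ' "$1"
}
```

For example, has_alert_cron /etc/crontab system && echo present checks the system crontab; for root's own crontab, run sudo crontab -l > /tmp/root.cron first and check that file with the user mode.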

Step 6: Verify Alert Configuration in GUI

After confirming the MTA works:

- Navigate to GUI > Alerts

- Verify alert conditions are enabled

- Check that recipient email addresses are valid

- Go to GUI > Alerts > Sent Alerts to see if alerts were triggered

Diagnosis:

- Entries in "Sent Alerts" but no emails received → MTA issue

- No entries in "Sent Alerts" → Check alert conditions or cronjob

Step 7: Test PHP mail() Function

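Isolate the issue by testing PHP directly. mail() returns true only if the MTA accepted the message for delivery, so print its return value:

php -r "var_dump(mail('your.email@example.com', 'Test from PHP', 'This is a test email'));"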

- If this works but VoIPmonitor alerts don't → Check GUI cronjob and alert configuration

- If this fails → MTA or PHP configuration issue

Troubleshooting Concurrent Calls Alerts Not Triggering

CDR-based concurrent calls alerts may not trigger as expected due to database queue delays or alert timing configuration. Unlike realtime concurrent calls alerts (see Anti-Fraud Rules), CDR-based alerts require CDRs to be written to the database before evaluation.

Check SQL Cache Files Queue

A growing SQL cache queue can prevent CDR-based alerts from triggering because the alert processor evaluates CDRs that have already been stored in the database, not calls still waiting in the queue.

- Navigate to GUI > Settings > Sensors

- Check the RRD chart for SQL cache files (SQLq/SQLf metric)

- If the queue is growing during peak times:

  - The database cannot keep up with CDR insertion rates

  - Alerts evaluate outdated data because recent CDRs have not been written yet

  - See Delay between active call and cdr view for solutions

CDR Timing vs "CDR not older than" Setting

CDR-based alerts include a "CDR not older than" parameter that filters which CDRs are considered for alert evaluation.

- Parameter location: In the concurrent calls alert configuration form

- Function: Only CDRs newer than this time window are evaluated

- Diagnosis:

  - Verify that the time difference between "Last CDR in database" and "Last CDR in processing queue" (in Sensors status) is smaller than your "CDR not older than" value

  - If the delay is larger, CDRs are being excluded from alert evaluation

  - Common causes: database overload, slow storage, insufficient MySQL configuration

- Solution:

  - Increase the "CDR not older than" value to match your database performance

  - See SQL_queue_is_growing_in_a_peaktime and Scaling for database tuning

  - The SQLq value should remain low (under 1000) during peak load
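The diagnosis comparison is simple arithmetic on two timestamps. A minimal sketch, assuming you copy both values from the Sensors status view in YYYY-MM-DD HH:MM:SS form and express the "CDR not older than" value in seconds (convert first if the form uses other units); cdr_window_check is a hypothetical name and relies on GNU date.

```shell
# Hypothetical helper: is the database lag small enough for the alert to see
# recent CDRs? Arguments: "Last CDR in database", "Last CDR in processing
# queue" (both YYYY-MM-DD HH:MM:SS) and the "CDR not older than" value in seconds.
cdr_window_check() {
  local db_s queue_s lag
  # GNU date parses the timestamps; UTC avoids DST surprises in the subtraction.
  db_s=$(TZ=UTC date -d "$1" +%s) || return 2
  queue_s=$(TZ=UTC date -d "$2" +%s) || return 2
  lag=$((queue_s - db_s))
  if [ "$lag" -lt "$3" ]; then
    echo "ok: lag ${lag}s < window ${3}s"
  else
    echo "excluded: lag ${lag}s >= window ${3}s"
  fi
}
```

For example, a 7-minute gap checked against a 300-second window prints "excluded: lag 420s >= window 300s", meaning recent CDRs fall outside the alert's evaluation.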

"Check Interval" Parameter and Low Thresholds

When testing concurrent calls alerts with very low thresholds (e.g., greater than 0 calls or 1 call), consider the Check interval parameter.

- Parameter location: In the concurrent calls alert configuration form

- Function: How often the alert condition is evaluated (time window for concurrent call calculation)

- Issue with low thresholds:

  - A call lasting 300 seconds (5 minutes) will show as concurrent for the entire duration

  - If Check interval is shorter than typical call durations, you may see temporary concurrent counts that disappear between evaluations

- Recommendation for testing:

  - Increase the Check interval to a longer duration (e.g., 60 minutes) when testing with very low thresholds

  - This ensures concurrent calls are counted over a longer time window, avoiding false negatives from short interval checks
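To see why a short Check interval can miss calls, here is a deliberately simplified model (an assumption for illustration, not VoIPmonitor's actual evaluation logic): a call counts toward a check window if any part of it falls inside that window. The function name is hypothetical.

```shell
# Simplified model for illustration only (not VoIPmonitor's evaluation logic):
# count calls whose [start, end) overlaps the window [win_start, win_start + interval).
# stdin: one call per line as "start_epoch end_epoch".
calls_in_window() {
  awk -v ws="$1" -v we="$(($1 + $2))" '
    NF >= 2 && $1 + 0 < we + 0 && $2 + 0 > ws + 0 { n++ }   # call overlaps window
    END { print n + 0 }
  '
}
```

With one call from t=0 to t=300 and another from t=1000 to t=1100, a 60-second window starting at t=400 counts 0 calls, so a "greater than 0" threshold never fires, while a 3600-second window starting at t=0 counts both.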

Fraud Concurrent Calls vs Regular Concurrent Calls Alerts

VoIPmonitor provides two different alert types for concurrent calls monitoring, which operate on different data sources and have different capabilities:

| Feature | Fraud Concurrent Calls | Regular Concurrent Calls |
|---|---|---|
| Data source | SIP INVITEs (realtime) | CDRs (after call ends) |
| Processing type | Realtime (packet inspection) | CDR-based (database query) |
| Aggregation ("BY" dropdown) | Source IP only (hard-coded) | Source IP, Destination IP, Domain, Custom Headers |
| Domain filtering | Not available (major limitation) | Available via SQL filter |
| Timing | Immediate (no database delay) | Delayed (requires CDR insertion) |
| Where configured | GUI > Alerts > Anti Fraud | GUI > Alerts |
| Table name | list_concurrent_calls in anti-fraud section | Standard alerts table |

Key Differences:

- Fraud concurrent calls detect concurrent INVITEs in realtime but the "BY" dropdown only supports Source IP aggregation. This is a hard-coded limitation of the realtime detection logic designed for detecting attacks from specific IPs. Use this for attack detection where you need immediate alerts.

- Regular concurrent calls alerts use stored CDRs and support multiple aggregation options including Destination IP (Called), Source IP, Domain, and custom headers via the Common Filters tab. Use this for capacity planning, trunk/capacity monitoring, or when you need to filter by destination.

- Destination IP monitoring: If you need to alert when concurrent calls to a specific destination IP (e.g., carrier, trunk) exceed a threshold, use the regular Concurrent calls alert. The Fraud concurrent calls alert cannot filter or aggregate by destination IP.

Example use cases:

| Scenario | Recommended Alert Type | Configuration |
|---|---|---|
| Detect flooding/attack from specific source IP (immediate) | Fraud: realtime concurrent calls | GUI > Alerts > Anti Fraud, BY: Source IP |
| Monitor trunk capacity limit (destination IP threshold) | Concurrent calls | GUI > Alerts, BY: Destination IP (Called) |
| Detect domain-specific concurrent call patterns | Concurrent calls | GUI > Alerts, Common Filters: Domain |
| Detect attacks with immediate triggering | Fraud: realtime concurrent calls | GUI > Alerts > Anti Fraud |

If you have configured a concurrent calls alert and need filtering by destination IP, domain, or custom headers, verify that you are using the regular Concurrent calls alert in GUI > Alerts and not the fraud variant.

Troubleshooting Alerts Not Triggering (General)

If alerts are not appearing in the Sent Alerts history at all, the problem is typically with the alert processor not evaluating alerts. This is different from MTA issues where alerts appear in history but emails are not sent.

Enable Detailed Alert Processing Logs

To debug why alerts are not being evaluated, enable detailed logging for the alert processor by adding the following line to your GUI configuration file:

# Edit the config file (adjust path based on your GUI installation)

nano ./config/system_configuration.php

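Add this line at the end of the file. The <?php and ?> tags are needed only if the file ends with a closing ?> tag; if it does not, add just the define() line, because a second <?php tag inside PHP code is a syntax error: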

<?php

define('CRON_LOG_FILE', '/tmp/alert.log');

?>

This enables logging that shows which alerts are being processed during each cron job run.
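Because CRON_LOG_FILE and CRON_PARALLEL_TASKS are added the same way, a small helper can append a define() line once and decide whether it needs its own <?php ... ?> wrapper. A minimal sketch; add_php_define is a hypothetical name, and it only inspects the last bytes of the file for a closing ?> tag.

```shell
# Hypothetical helper: append define('NAME', VALUE); to a PHP config file once.
# VALUE is inserted verbatim, so quote strings yourself, e.g. "'/tmp/alert.log'".
add_php_define() {
  local file="$1" name="$2" value="$3" stmt
  if grep -q "define('${name}'" "$file" 2>/dev/null; then
    echo "already defined: $name"
    return 0
  fi
  stmt="define('${name}', ${value});"
  if [ -s "$file" ] && tail -c 100 "$file" | tr -d '[:space:]' | grep -q '?>$'; then
    # File ends outside PHP mode: open and close a new PHP block.
    printf '\n<?php\n%s\n?>\n' "$stmt" >> "$file"
  elif [ -s "$file" ]; then
    # File is still in PHP mode (no closing tag): append the statement only.
    printf '\n%s\n' "$stmt" >> "$file"
  else
    # Empty or missing file: create a complete PHP block.
    printf '<?php\n%s\n?>\n' "$stmt" >> "$file"
  fi
}
```

For example: add_php_define ./config/system_configuration.php CRON_LOG_FILE "'/tmp/alert.log'". Back up the file first, since a syntax error in a GUI configuration file breaks the pages that load it.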

Increase Parallel Processing Threads

If you have many alerts or reports, the default number of parallel threads may cause timeout issues. Increase the parallel task limit:

# Edit the configuration file

nano ./config/configuration.php

Add this line at the end of the file (include the <?php and ?> tags only if the file ends with a closing ?> tag):

<?php

define('CRON_PARALLEL_TASKS', 8);

?>

The value of 8 is recommended for high-load environments. Adjust based on your alert/report volume and server capacity.

Monitor Alert Processing Logs

After enabling logging, monitor the alert log file to see which alerts are being processed:

# Watch the log in real-time

tail -f /tmp/alert.log

The log shows entries like:

begin alert [alert_name]

end alert [alert_name]

Interpreting the logs:

- If you do not see your alert name in the logs → The alert processor is not evaluating it. Check your alert configuration, filters, and data availability.

- If you see the alert in logs but it does not trigger → The alert conditions are not being met. Check your thresholds, filter logic, and verify the CDR data matches your expectations.

- If logs are completely empty → The cron job may not be running or the GUI configuration files are not being loaded. Verify the cron job and file paths.
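If the log is busy, a quick way to spot problems is to list alerts whose begin line has no matching end line, which may mean processing of that alert stopped or is still running. A sketch assuming lines contain "begin alert <name>" and "end alert <name>" as shown above (any prefix, such as a timestamp, is ignored); the function names are hypothetical.

```shell
# Hypothetical helpers for the CRON_LOG_FILE output.
# List alert names that have more "begin alert" than "end alert" lines.
unfinished_alerts() {
  awk '
    /begin alert / { n = $0; sub(/.*begin alert /, "", n); open[n]++ }
    /end alert /   { n = $0; sub(/.*end alert /, "", n); open[n]-- }
    END { for (n in open) if (open[n] > 0) print n }
  ' "${1:-/tmp/alert.log}"
}

# Exit status 0 if the named alert was evaluated at least once.
alert_seen() {
  grep -qF "begin alert $1" "${2:-/tmp/alert.log}"
}
```

For example, alert_seen "My MOS alert" || echo "not evaluated" tells you which branch of the interpretation list above applies.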

Alert Not Appearing in Logs

If your alert does not appear in `/tmp/alert.log`:

1. Verify the cron job is running:

# Check the cron job exists

crontab -l

# Manually run the cron script from the GUI directory to see errors (adjust the path to your installation)

cd /var/www/html

php ./php/run.php cron

2. Verify data exists in CDR:

Navigate to GUI > CDR > Browse and filter for the timeframe to check that the calls that should trigger the alert exist.

3. Check alert configuration:

- Verify alert is enabled

- Verify filter logic matches your data (IP addresses, numbers, groups)

- Verify thresholds are reasonable for the actual QoS metrics

- Verify GUI license is not locked (Check GUI > Settings > License)

"Crontab Log is Too Old" Warning - Database Performance Issues

The VoIPmonitor GUI displays the warning "Crontab log is too old" when the last successful cron run is older than the expected interval. While this often indicates a missing or misconfigured cron job, it can also occur when the database is overloaded and the cron script runs slowly.

Common Causes

- Missing or broken cron entry - The cron job does not exist in /etc/crontab or the command fails when executed

- Database overload - The cron job runs but completes slowly due to database performance bottlenecks, causing the "last run" timestamp to drift outside the expected window

Distinguishing the Causes

Use the following diagnostic workflow to determine if the issue is cron configuration vs. database performance:

Step 1: Verify the cron job is actually running

Check if the cron execution timestamp is updating (even if slowly):

# Check the current cron timestamp from the database

mysql -u voipmonitor -p voipmonitor -e "SELECT name, last_run FROM scheduler LIMIT 1"

# The last_run timestamp should update at least every few minutes

# If it never updates, the cron is not running (see Step 2)

# If it updates but lags by more than 5-10 minutes, it's a performance issue (see Step 3)

Step 2: If cron is not running at all

Follow the standard cron setup instructions in the "Setting Up the Cron Job" section above. Common issues:

- Cron entry missing from /etc/crontab

- Incorrect PHP path (use full path like /usr/bin/php instead of php)

- PHP CLI missing IonCube loader (check with `php -r 'echo extension_loaded("ionCube Loader")?"yes":"no";'`)

- Wrong file permissions or incorrect web directory path

- PHP CLI version mismatch - System CLI PHP differs from web server PHP

PHP CLI Version Mismatch Fix

Even if the cron job exists and IonCube Loader is installed for both web and CLI, the CLI may be using a different PHP version than the web server, causing the cron script to fail. This commonly occurs when multiple PHP versions are installed on the system.

Symptoms:

- Cron job exists in /etc/crontab

- PHP CLI has IonCube loader installed

- The GUI shows "Crontab log is too old" warning

- Manual command execution succeeds when using the correct PHP version

Diagnosis:

1. Check the target PHP version required by the GUI:

cat /var/www/html/ioncube_phpver

# Output may be: 81 (for PHP 8.1), 82 (for PHP 8.2), etc.

2. Check the current CLI PHP version:

php -v

# Example output: PHP 8.2.26 (the default CLI version may not match the GUI requirement)

3. List available PHP CLI versions:

ls /usr/bin/php*

# You may see: php8.1, php8.2, php8.3

Solution: Set CLI PHP Version to Match Web Server

Use the `update-alternatives` command to set the default CLI PHP version to match the web server:

# If ioncube_phpver shows "81", set CLI to PHP 8.1

sudo update-alternatives --set php /usr/bin/php8.1

# If ioncube_phpver shows "82", set CLI to PHP 8.2

sudo update-alternatives --set php /usr/bin/php8.2

# Verify the change

php -v

which php

# Should now point to the correct version
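The diagnosis steps can be combined into one check. A sketch assuming the ioncube_phpver file holds a two-digit value such as 81 and that versioned binaries are named /usr/bin/phpX.Y, as in the examples above; the function names are hypothetical, and check_cli_php only prints the update-alternatives command rather than running it.

```shell
# Hypothetical helpers: compare the PHP version the GUI expects (from
# ioncube_phpver, e.g. "81") with the version of the CLI "php" binary.
phpver_from_ioncube() {
  local raw
  # Keep only digits, e.g. "81\n" -> "81"; fail if the file is unreadable.
  raw=$(tr -dc '0-9' < "${1:-/var/www/html/ioncube_phpver}") || return 1
  [ "${#raw}" -ge 2 ] || return 1
  # First digit is the major version, the rest is the minor: 81 -> 8.1
  printf '%s.%s\n' "$(printf '%s' "$raw" | cut -c1)" "$(printf '%s' "$raw" | cut -c2-)"
}

check_cli_php() {
  local want have
  want=$(phpver_from_ioncube "$1") || { echo "cannot read ioncube_phpver" >&2; return 2; }
  have=$(php -r 'echo PHP_MAJOR_VERSION, ".", PHP_MINOR_VERSION;' 2>/dev/null)
  if [ "$want" = "$have" ]; then
    echo "ok: CLI php is $have"
  else
    echo "mismatch: GUI expects PHP $want, CLI php is ${have:-not found}"
    echo "suggested fix: sudo update-alternatives --set php /usr/bin/php$want"
    return 1
  fi
}
```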

Verify the Fix:

Test the cron command manually and check IonCube is loaded with the new version:

cd /var/www/html

php php/run.php cron

# Verify IonCube is loaded

php -r 'echo extension_loaded("ionCube Loader")?"yes":"no";'

# Should output: yes

After a few minutes, the "Crontab log is too old" warning in the GUI should disappear, confirming the cron job is now running successfully.

Step 3: If cron runs but slowly (database performance issue)

When the cron job runs but takes a long time to complete, the issue is database overload. Diagnose using the Sensors statistics:

- Navigate to GUI > Settings > Sensors

- Click on the sensor status to view detailed statistics

- Compare the following timestamps:

  - Last CDR in database - The timestamp of the most recently completed call stored in MySQL

  - Last CDR in processing queue - The timestamp of the most recent call that has reached the sniffer's processing queue

If there is a significant delay (minutes or more) between these two timestamps during peak traffic, the database cannot keep up with CDR insertion. This causes alert/reports processing (run.php cron) to also run slowly.

Solutions for Database Performance Issues

1. Check MySQL configuration

Ensure your MySQL/MariaDB configuration follows the recommended settings for your call volume. Key parameters:

- innodb_flush_log_at_trx_commit - Set to 2 for better performance (or 0 in extreme high-CPS environments)

- innodb_buffer_pool_size - Allocate 70-80% of available RAM for high-volume deployments

- innodb_io_capacity - Match your storage system capabilities (e.g., 1000000 for NVMe SSDs)

See Scaling and High-Performance_VoIPmonitor_and_MySQL_Setup_Manual for detailed tuning guides.
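To turn the 70-80% guideline into a concrete value, a small calculation is enough. A sketch that reads MemTotal from /proc/meminfo (or takes the RAM size in MB as a second argument); the function name is hypothetical, and the printed line still has to be placed in the [mysqld] section of your MySQL/MariaDB configuration.

```shell
# Hypothetical helper: suggest innodb_buffer_pool_size as a share of total RAM.
# Usage: suggest_buffer_pool_mb [percent] [total_ram_mb]
suggest_buffer_pool_mb() {
  local pct="${1:-75}" total_mb="$2"
  case "$pct" in
    ''|*[!0-9]*) echo "percentage must be an integer" >&2; return 1 ;;
  esac
  if [ -z "$total_mb" ]; then
    # MemTotal is reported in kB; convert to whole MB.
    total_mb=$(awk '/^MemTotal:/ { print int($2 / 1024) }' /proc/meminfo)
  fi
  if [ "$pct" -lt 70 ] || [ "$pct" -gt 80 ]; then
    echo "warning: ${pct}% is outside the 70-80% range" >&2
  fi
  echo "innodb_buffer_pool_size = $((total_mb * pct / 100))M"
}
```

On a host with 16384 MB of RAM and the default 75%, it prints innodb_buffer_pool_size = 12288M.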

2. Increase database write threads

In /etc/voipmonitor.conf, increase the number of threads used for writing CDRs (default is 4; increase based on workload):

mysqlstore_max_threads_cdr = 8

3. Monitor SQL queue statistics

In the expanded status view (GUI > Settings > Sensors > status), check the SQLq value:

- SQLq (SQL queue) growing steadily - Database is a bottleneck, calls are waiting in memory

- SQLq remains low (under 1000) - Database is keeping up, may need other tuning

See SQL_queue_is_growing_in_a_peaktime for more information.

4. Reduce alert/report processing load

Too many alert rules or complex reports can exacerbate the problem:

- Review and disable unnecessary alerts in GUI > Alerts

- Reduce the frequency of daily reports (edit in GUI > Reports)

- Increase parallel processing tasks: in /var/www/html/config/configuration.php, set define('CRON_PARALLEL_TASKS', 8); (requires increasing PHP memory limits)

5. Check database query performance

Identify slow queries:

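# Enable slow query logging in my.cnf (under the [mysqld] section), then restart MySQL/MariaDB

[mysqld]

slow_query_log = 1

slow_query_log_file = /var/log/mysql/slow.log

long_query_time = 2

# After waiting for a cron cycle, check the slow query log

tail -f /var/log/mysql/slow.log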

Look for queries taking more than a few seconds. Common culprits:

- Missing indexes on frequently filtered columns (caller, callee, sipcallerip, etc.)

- Complex alert conditions joining large tables

- Daily reports scanning millions of rows without date range limitations
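Rather than scrolling through the slow log with tail, you can list the entries above a threshold, slowest first. A sketch assuming the standard slow log layout, where each entry starts with a "# Query_time:" header line; the function name is hypothetical, and multi-line statements are joined onto one line.

```shell
# Hypothetical helper: print "query_time<TAB>statement" for slow-log entries
# slower than THRESHOLD seconds, slowest first.
# Usage: slow_queries_over [threshold_seconds] [slow_log_path]
slow_queries_over() {
  local threshold="${1:-5}" log="${2:-/var/log/mysql/slow.log}"
  awk -v t="$threshold" '
    function flush() {
      if (qt != "" && qt + 0 > t + 0) printf "%s\t%s\n", qt, sql
      qt = ""
    }
    /^# Query_time:/ { flush(); qt = $3; sql = ""; next }   # start of a new entry
    /^#/ || /^SET timestamp=/ || /^use / { next }            # metadata lines
    qt != "" { sql = sql $0 " " }                            # statement text
    END { flush() }
  ' "$log" | sort -t "$(printf '\t')" -k1,1 -rn
}
```

Statements on the cdr table that appear repeatedly in this output are good candidates for the index and date-range checks listed above.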

6. Scale database architecture

For very high call volumes (4000+ concurrent calls), consider:

- Separate database server from sensor hosts

- Use MariaDB with LZ4 page compression

- Implement database replication for read queries

- Use hourly table partitioning for improved write performance

See High-Performance_VoIPmonitor_and_MySQL_Setup_Manual for architecture recommendations.

Verification

After applying fixes:

1. Monitor the "Crontab log is too old" timestamp in the GUI

- The timestamp should update every 1-3 minutes during normal operation

- If it still lags by 10+ minutes, further tuning is required

2. Check sensor statistics (GUI > Settings > Sensors)

- The delay between "Last CDR in database" and "Last CDR in processing queue" should be under 1-2 minutes during peak load

- SQLq should remain below 1000 and not grow continuously

3. Test alert processing manually

# Run the cron script manually and measure execution time

time php /var/www/html/php/run.php cron

# Should complete within 10-30 seconds in most environments

# If it takes longer than 60-120 seconds, database tuning is needed
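If you repeat this check after each tuning change, a small wrapper can record the duration and apply the rough thresholds above. A minimal sketch; the function names are hypothetical, and the "borderline" band between 30 and 60 seconds is an assumption of this sketch rather than a documented limit.

```shell
# Hypothetical helpers. Thresholds follow the rough guidance above:
# up to 30 s is typical, 60 s or more points to database tuning; the
# "borderline" band in between is an assumption of this sketch.
classify_cron_duration() {
  if [ "$1" -le 30 ]; then
    echo "normal"
  elif [ "$1" -lt 60 ]; then
    echo "borderline"
  else
    echo "needs database tuning"
  fi
}

time_cron_run() {
  local script="${1:-/var/www/html/php/run.php}" start end secs
  start=$(date +%s)
  php "$script" cron            # same command the cron job runs
  end=$(date +%s)
  secs=$((end - start))
  echo "run.php cron took ${secs}s: $(classify_cron_duration "$secs")"
}
```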

See Also

- Anti-Fraud Rules - Detailed fraud detection configuration

- Reports - Daily reports and report generator

- Sniffer Troubleshooting - General troubleshooting

AI Summary for RAG

Summary: VoIPmonitor Alerts & Reports system provides email notifications based on QoS parameters and SIP conditions. Alert types include: RTP alerts (MOS, jitter, packet loss, delay), SIP response alerts (including 408 timeout and response code 0), sensors health monitoring, SIP REGISTER RRD beta (response time monitoring), SIP failed Register beta (credential-stuffing detection), multiple register beta (accounts from multiple IPs), RTP&CDR alerts (PDD monitoring), CDR trends alerts (ASR trend-based monitoring with Offset/Range/Deviation parameters), and custom report-based alerts. CRITICAL: For percentage thresholds, the "from all" checkbox controls whether calculation uses ALL CDRs (checked) or only filtered CDRs (unchecked) - always UNCHECK when monitoring specific IP groups. External scripts enable webhook integration (Datadog, Slack). IP addresses in CDR table are stored as decimal integers - use long2ip() (PHP) or INET_NTOA() (MySQL) for conversion. Troubleshooting covers: MTA configuration, crontab setup, CRON_LOG_FILE debugging, concurrent calls alerts timing issues (SQL queue delays, "CDR not older than" parameter), and PHP CLI version mismatch (use update-alternatives to match web server PHP version).

Keywords: alerts, email notifications, QoS, MOS, jitter, packet loss, SIP response, 408 Request Timeout, response code 0, sensors monitoring, SIP REGISTER RRD beta, SIP failed Register beta, credential stuffing, brute force, multiple register beta, RTP&CDR alerts, PDD, Post Dial Delay, CDR trends, ASR, Answer Seizure Ratio, Offset, Range, Deviation, from all checkbox, percentage threshold, DEFAULT_EMAIL_FROM, crontab, MTA, Postfix, CRON_LOG_FILE, CRON_PARALLEL_TASKS, external scripts, webhooks, Datadog, Slack, long2ip, INET_NTOA, decimal IP, concurrent calls alerts, SQL queue, SQLq, fraud concurrent calls, PHP CLI version mismatch, update-alternatives, ioncube_phpver

Key Questions:

- How do I set up email alerts in VoIPmonitor?

- What types of alerts are available?

- What does the "from all" checkbox do in percentage alerts?

- How do I configure alerts for a specific IP group?

- How do I detect SIP registration floods (credential-stuffing)?

- What is the difference between SIP failed Register beta and multiple register beta?

- How do I configure CDR trends alerts for ASR monitoring?

- What are Offset and Range parameters in CDR trends?

- How do I configure the "From" address for alert emails (DEFAULT_EMAIL_FROM)?

- How do I configure external scripts for webhooks (Datadog, Slack)?

- How do I convert decimal IP addresses to dotted-decimal format?

- Why are concurrent calls alerts not triggering?

- What is the "CDR not older than" parameter?

- What is the difference between fraud and regular concurrent calls alerts?

- What does "Crontab log is too old" warning mean?

- How do I fix PHP CLI version mismatch for cron jobs?

- How do I enable detailed alert processing logs (CRON_LOG_FILE)?

- How do I troubleshoot MTA email delivery issues?